Why PostgreSQL High Availability Matters and How to Achieve It

Ensuring your application can handle failures and outages is crucial, and the availability of your application is only as good as the availability of your PostgreSQL instance. With that in mind, you may be wondering which PostgreSQL high availability (HA) deployment option is best for your application.

Let’s review several popular solutions that increase the high availability of PostgreSQL deployments and, as a result, the availability overall of your application. Why several and not one? Well, there’s no silver bullet or one-size-fits-all solution when it comes to high availability and PostgreSQL. So, walk through the options for a highly available deployment of PostgreSQL and then you can make a choice that fits your use case.

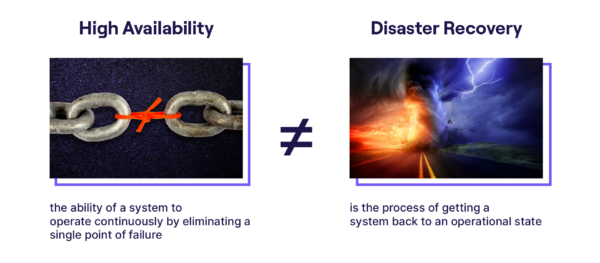

Difference Between High Availability and Disaster Recovery

High availability should not be confused with disaster recovery (DR). Both relate to and influence each other during an application’s design and development, but HA differs significantly from DR.

High availability refers to a system’s ability to operate continuously by removing the possibility of a single point of failure.

Disaster recovery is the process of getting the system back to normal operations after a failure or outage.

This article focuses on how you can make a PostgreSQL deployment highly available by eliminating a single point of failure. However, disaster recovery techniques are still relevant when discussing details of the failover process.

How to Measure High Availability

Sooner or later, every system experiences a failure or an outage. Even if a company claims that “our service is here for you 24×7”, in reality, that service runs on software and hardware that can’t provide the same guarantees. For instance, disks can be corrupted, or an application instance can crash due to a memory overflow caused by a sudden load spike.

How should high availability be measured, then? Well, it can be measured by defining and committing to a certain uptime that’s part of your availability SLA (Service Level Agreement).

If your 24×7 service guarantees three 9s (99.9%) of uptime, then it can still be unavailable up to 8.76 hours a year. If you commit to five 9s (99.999%) of uptime, your service can only be down 5.25 mins per year. This is a significant difference.

So how should you calculate those 9s for your use case? Should you provide three, four, or even six 9s of uptime?

The most accurate (and straightforward) way to calculate target uptime is to figure out your recovery point objective (RPO) and recovery time objective (RTO). Those two metrics will influence and eventually help you determine needed uptime.

Note: Even though RPO and RTO are characteristics and metrics for disaster recovery, you should still use them to determine your availability SLAs and uptime.

Recovery time objective (RTO) is the amount of time within which the service must be restored and returned to normal operations.

For some services, the RTO must be as low as a few seconds; while for others, it can be as much as several hours.

Recovery point objective (RPO) is the maximum acceptable amount of data loss after an incident/failure/outage. Usually, data loss is measured in bytes/megabytes/etc., by the number of lost transactions, or by the last seconds/minutes/etc. of changes.

For some services, there is no amount data loss that is acceptable (RPO=0), while for others, the loss of the last two minutes of data changes won’t disrupt the business or impact users.

Once you know your RTO and RPO, you can define the availability SLA for your application. And once you know your SLA, you can pick the most suitable PostgreSQL deployment option and maximize its high availability.

PostgreSQL Deployment Options for High Availability

Let’s review how to approach and increase high availability for the following PostgreSQL deployment options:

- Single database instance—This standard PostgreSQL deployment option has a single instance that handles all reads and writes.

- Single primary instance with read replicas— This deployment option has a single primary PostgreSQL instance and one or more read replicas. The primary serves both writes and reads, while the replicas get only the read traffic.

- Multi-master (sharded PostgreSQL with a coordinator)—Data is sharded across multiple standalone PostgreSQL instances (aka. nodes or workers). A coordinator node routes requests among those standalone instances.

- Multi-master (sharded PostgreSQL without the coordinator)—Data is distributed across multiple database nodes that can communicate with each other. In other words, it is a shared-nothing architecture without the coordinator node.

Let’s now look at how high availability is achieved or maximized for these PostgreSQL deployment options.

High Availability With Single PostgreSQL Database Instance

A single database instance is used when the compute (CPU) and capacity (storage, memory) resources are sufficient to handle the application read and write workloads.

To maximize high availability for this deployment option, the primary PostgreSQL instance needs to be complemented with standby servers. Changes are replicated from the primary to standbys, and a failover/failback solution simplifies the switch between the servers during an outage.

The primary and standby instances are your ordinary PostgreSQL servers. So, there’s nothing more to elaborate on here. However, the replication and failover/failback solutions depend on your RTO, RPO, availability SLA, and preferences. So let’s take a look at those.

PostgreSQL Replication Solutions

PostgreSQL offers many replication solutions. Two commonly used ones are streaming and logical replication.

With streaming replication, the write-ahead-log (WAL) records are shipped from the primary to standby instances. The changes are replicated for the entire database; you cannot filter out specific tables. The configuration of the primary and standbys must be the same.Logical replication also works with the WAL records of the primary instance. But, instead of sending the changes as-is in the binary format, the WAL records are decoded into logical changes that are then replicated to the standbys. You can pick which tables to replicate, and the primary and standbys configuration doesn’t need to be the same.

Both streaming and logical replication support several synchronization modes that you can pick based on your RPO, RTO and performance requirements.

A Chat About PostgreSQL Replication Modes

The first three modes from the top are used if changes from the primary to standbys need to be replicated synchronously. For instance, with the remote_write mode, the primary waits while standbys acknowledge that a change is written to their file systems, but has not necessarily reached durable storage.

With the synchronous_commit=on mode, the primary waits while the change is flushed to the durable storage of the standbys. The remote_apply mode provides the guarantees of the previous two, plus ensures that the change will be visible immediately to all of the queries executed on the standbys.

If you’re RPO=0, meaning no data loss is acceptable, then changes must be replicated in the synchronous_commit=on or synchronous_commit=remote_apply.

If the RPO > 0, you can improve performance by using either the synchronous remote_write mode or two remaining asynchronous modes – local and off. The local mode waits while the change is flushed to durable storage on the primary instance, but doesn’t wait for an acknowledgment from the standbys. The off mode doesn’t even wait while the change is flushed to disk on the primary.

Failover and Failback Solutions for PostgreSQL

Having standbys that receive updates from the primary improves high availability by removing the single point of failure represented by the primary. The standbys can take over if the primary becomes unavailable.

The process for promoting a standby to the new primary (in case the current primary has failed) is known as the failover. After restoring the failed primary, you can instantiate a reverse process called failback to reinstate it as the main instance.

Failover and failback do not happen instantly. The duration of the failover and failback procedures affects your RTO and uptime. Therefore, automating these procedures as much as possible is crucial for improving their efficiency.

Pgpool and Patroni are widely used to automate failover and failback processes. We’ll discuss Patroni later in relation to the multi-master deployments with a coordinator. As for Pgpool, let’s see how it can be used by the deployments with a single primary and one or more standbys.

A Sample Configuration for High Availability With Single PostgreSQL Database Instance

Let’s combine all the pieces and look at a sample, highly available (HA) configuration for deployments with a single primary instance. Assume that your solution is deployed in one of the regions of a public cloud environment:

The client application connects to a virtual IP address whose configuration supports the sending of traffic across zone A and B. During normal operations, the virtual IP forwards requests to the active instance of Pgpool in availability zone A. The primary PostgreSQL instance runs in the same zone and receives requests from the abovementioned Pgpool instance.

The standby instance is located in availability zone B. Because the current solution requires RPO=0, the replication from the primary to standby is synchronous (NOTE: For the synchronous replication, you should have 2+ standby, otherwise, the primary will get stuck if a single standby instance becomes unavailable).

A highly available architecture assumes that any single points of failure will be eliminated, which is why the primary Pgpool instance from zone A is complemented with a standby one in zone B. The watchdog instances of Pgpool work in both zones and monitor the healthiness of the Pgpool processes.

Now, imagine that the primary PostgreSQL instance goes down. If that happens, the active Pgpool instance in zone A will failover to the standby PostgreSQL instance in zone B. If the entire zone A goes down, the virtual IP can be reassigned to the standby Pgpool instance in zone B, and that instance will forward all requests to the standby PostgreSQL instance within the same zone.

High Availability With Single Primary Instance and Read Replicas

The previous deployment option uses the standby PostgreSQL instances to improve availability. These instances can either remain isolated from all application workloads and wait to be promoted as a primary during an outage, or they can be utilized as read replica instances to scale reads.

An HA solution for deployments with read replicas is not much different from deployments utilizing standbys:

Similar to the deployment with standbys, a primary PostgreSQL instance manages all writes and replicates changes to read replicas. In addition, failover/failback solutions can promote a replica to a new primary. The difference lies in the use of a load balancer that distributes read traffic between the primary and replica instances.As with the failover/failback solution, there are many options for the load balancing solution. Consider HAProxy, Pgpool, or a cloud native load balancer if you want to deploy PostgreSQL in the cloud.

A Sample Configuration for High Availability With Single Primary Instance and Read Replicas

As long as Pgpool can be used for failover, load balancing, and connection pooling, let’s continue using that tool for the sample HA setup of the deployment with a read replica.

The client application connects to the active Pgpool instance via a virtual IP address. That Pgpool instance sends all the writes to the primary PostgreSQL instance, but it can also load balance reads across both the primary and replica.

The client application connects to the active Pgpool instance via a virtual IP address. That Pgpool instance sends all the writes to the primary PostgreSQL instance, but it can also load balance reads across both the primary and replica.Depending on the replication mode between the primary and replica, reads from the replica can return the latest data or have a data lag. You select between synchronous and asynchronous replication modes based on your RPO, RTO, and other requirements.

The failover/failback setup is similar to the one used for the deployment with standbys discussed earlier.

High Availability With Multi-Master Deployments With Coordinator

You use sharding when a single PostgreSQL primary instance is no longer enough for the application’s needs. Some apps need to scale out to improve (or get) unbound storage capacity. Some need to scale out to get more CPUs and load balance writes (in addition to reads). Some may even need both.

Sharding is a simple yet powerful method for distributing a data set across multiple database instances.

With sharding, you split a single primary PostgreSQL instance into multiple smaller instances (i.e. workers, nodes). Each smaller instance is assigned one or more shards, with each shard storing a subset of the entire data set. Effectively, each smaller instance becomes a primary instance that handles both read and write requests, but only for the subset of the data it owns.

With sharding, you split a single primary PostgreSQL instance into multiple smaller instances (i.e. workers, nodes). Each smaller instance is assigned one or more shards, with each shard storing a subset of the entire data set. Effectively, each smaller instance becomes a primary instance that handles both read and write requests, but only for the subset of the data it owns.Sharding With PostgreSQL

You can manually implement sharding or use an existing 3rd party solution to automate the process. One such solution is CitusData, a PostgreSQL extension that simplifies the sharding of PostgreSQL deployments.

The client application connects to the Citus coordinator, which decides which worker node(s) (i.e. PostgreSQL instance) must process a client request.Next, let’s see what needs to be done to improve the high availability of this deployment option.Complete Sample Configuration for High Availability with Multi-Master Deployments with Coordinator

A sample of a highly available configuration for the CitusData deployment can look like this:

Let’s move from left to right of the diagram:

Let’s move from left to right of the diagram:- The client connects to HAProxy, which routes all requests to the primary Citus coordinator. In the case of a coordinator outage, this proxy will forward requests to the coordinator’s standby.

- The Citus primary coordinator is connected to PgBouncer connection poolers that are deployed alongside each worker node (PostgreSQL instance). The Citus coordinator forwards application requests to the worker nodes using connections from of those pools.

- Each primary worker instance has its own standby instances (or replica instances). The changes are replicated from a primary to an associated standby. So for a RPO=0 scenario using synchronous replication, it is crucial to have at least two standbys for each primary. This ensures that the primary can successfully commit changes even if one standby becomes unavailable.

- Patroni agents are deployed alongside primary and standby workers, as well as primary and standby coordinator instances. Patroni monitors the state and healthiness of the cluster and takes care of the failover/failback procedures if there’s an outage.

- Patroni agents use etcd cluster to reach consensus for various operations, including failover and failback.

High Availability for Multi-Master Deployments Without Coordinator

Generally, the fewer components a system has, the more reliable it is. If you need to shard PostgreSQL, but want a simpler solution in terms of high availability, then a multi-master deployment without the coordinator is a suitable option.

In reality, removing the coordinator from existing sharding solutions for PostgreSQL is challenging. The main obstacle is the lack of direct communication among PostgreSQL workers (instances). Each worker is a standalone database server that has no knowledge of any other worker in the deployment.

So what options exist if you want to continue using PostgreSQL but run on a simple scalable and fault-tolerant architecture? A great option is YugabyteDB, a cloud native distributed SQL database built on PostgreSQL source code.

A YugabyteDB cluster consists of several YugabyteDB nodes, each storing a subset of the data, distributed using familiar sharding technique. Each YugabyteDB node consists of query and storage layers.

A YugabyteDB cluster consists of several YugabyteDB nodes, each storing a subset of the data, distributed using familiar sharding technique. Each YugabyteDB node consists of query and storage layers.YugabyteDB’s query layer is the PostgreSQL query layer (source code) with some enhancements to make it possible for the PostgreSQL’s parser/analyzer/planner/executor to execute requests over YugabyteDB’s distributed storage layer—DocDB.

YugabyteDB nodes can (and do) talk to each other, which explains why there is no single coordinator node in the database architecture.

Changes are replicated using the Raft protocol.

There is a raft leader for each shard group. The leader receives the reads and writes for data belonging to the shard. In case of an update, the leader is responsible for the changes replicated to the raft group followers that store redundant copies of the data.A Sample Configuration for High Availability with Multi-Master Deployment without Coordinator

YugabyteDB is built on a shared-nothing architecture, with nodes that communicate with each other. Therefore, it doesn’t require any external components for highly available deployments. YugabyteDB is built with high availability in mind. So, a highly available and scalable architecture with YugabyteDB can be as simple as:

The client application connects to the cluster of nodes using a standard PostgreSQL driver or a YugabyteDB smart driver. The smart driver is aware of all the cluster nodes and can load balance requests starting from the application layer.The nodes deal with potential outages themselves. For instance, if one node goes down, the others will detect the outage. Since the remaining nodes have a redundant and consistent copy of data, they will start immediately processing application requests that were previously sent to the failed node. YugabyteDB provides RPO=0 (no data loss) and RTO within the range of 3-15 seconds (depending on the database and TCP/IP configuration defaults).

Summary

Whether you use a single PostgreSQL database server, or shard data across multiple database instances, you need to ensure that your deployment can withstand various outages and failures.

Know your RPO, RTO, and availability SLAs, and then choose a highly available configuration that will keep your PostgreSQL deployment running—even in the face of the most adverse of incidents.

PostgreSQL High Availability FAQ

- Does PostgreSQL support high availability?

High availability can be achieved or maximized for these PostgreSQL deployment options: single database instance (with or without read replicas) and multi-master (with or without coordinator). - What are the main differences between high availability and disaster recovery?

High availability ensures uninterrupted system operation by eliminating single points of failure, while disaster recovery focuses on restoring normal operations after a failure or outage. - How to make PostgreSQL highly available?

Several PostgreSQL high availability (HA) deployment options exist because there is no one-size-fits-all solution. Choosing the best option depends on your specific use case and requirements. - What are the methods to measure high availability?

High availability is measured by defining and meeting uptime commitments outlined in your SLA. Calculate target uptime by figuring out RPO and RTO.