Improving PostgreSQL: How to Overcome the Tough Challenges with YugabyteDB

Rick Branson’s well-known blog post “10 Things I Hate About PostgreSQL” highlights the key issues people face with PostgreSQL. The problems he highlights have been known for a while and stem from the design of PostgreSQL’s storage and transactions. Efforts to fix them have begun, but making changes is difficult in a database that’s been used for decades to support critical applications. Often, there’s no direct communication between users and the community since PostgreSQL is free and open-source.

The PostgreSQL community consistently adds features and solutions to the upper layer, like SQL language compliance, relational features, and support for advanced data types like JSONB. However, evolving the bottom layer—storage and transaction—is challenging. It still relies on older tech/designs such as Heap tables, B*Tree indexes, full-page WAL, checkpoints, Vacuum, and 32-bit XID. Plus there is a lack of in-place updates. The list goes on and on. While it functions and there are workarounds for problems, the inconveniences persist, as noted in Rick’s “10 Things…” blog post.

YugabyteDB is a distributed SQL database that’s runtime compatible with PostgreSQL and designed with a cloud-native, resilient, and elastically scalable architecture. The modern storage layer design also addresses most of the problems mentioned in the “10 Things…” blog. This article walks through each of the ten problem areas and explains how they are resolved in YugabyteDB.

#1: Disastrous Transaction ID (XID) Wraparound

PostgreSQL uses a 32-bit transaction ID, and the database freezes when it is exhausted. Regular table vacuuming is needed to prevent this. The potential future use of 64-bit integers might resolve this, but for now, it remains an issue.

YugabyteDB employs Hybrid Logical Time (HLC) for cluster synchronization, which includes a physical clock component. Therefore, it will never exhaust. More information can be found in A Matter of Time: Evolving Clock Sync for Distributed Databases.

#2: Data Loss Likely During Failover

Because it is not natively distributed, PostgreSQL lacks ACID across multiple servers. An async replica can be set up to handle reads (with an acceptable lag) and for disaster recovery (with an RTO measured in minutes and an RPO with some data loss). A quorum of sync replicas can lessen the risk of data loss but will increase write latency. This must all be carefully managed to avoid data corruption in case of failover.

YugabyteDB efficiently uses sync replication, sharding the tables and indexes into tablets and replicating them with the RAFT algorithm. It ensures there’s only one “leader” to take the reads and writes for a set of rows or index entries, eliminating split-brain risk. And a “follower” can be elected as a new “leader” within 3 seconds. More information can be found in How Does the Raft Consensus-Based Replication Protocol Work in YugabyteDB?

#3: Inefficient Replication That Spreads Corruption

PostgreSQL streaming replication is based on physical replication through the WAL at page level. I disagree with the Spread Corruption claim since, in this area, WAL streaming replication is generally much safer than storage-based replication. The issue likely stemmed from a non-full-page WAL record corrupting an existing block in the standby.

YugabyteDB’s replication is logical, operating at the key-value level and making it more efficient in terms of the volume transferred—a crucial factor for cross-cloud-region clusters’ costs. It allows each tablet peer to apply changes to its files. YugabyteDB writes sequentially into Sorted Sequence Table (SST) files, eliminating the risk of new changes corrupting previous blocks. Independent compaction on each node detects and repairs block corruption, thanks to checksum checking.

#4: MVCC Garbage Frequently Painful

PostgreSQL writes entire new rows even when updating one byte in a single column, leading to table bloat. This affects performance on index maintenance, especially when using the default 100% fill factor. The result? Frequent vacuuming.

YugabyteDB, on the other hand, stores updates on a per-column basis. The garbage collection of old versions, which are kept for a short time for MVCC purposes, is managed by background compaction of SST files per table/index shard. Since the SST files are immutable, this process doesn’t interfere with writes.

#5: Process-Per-Connection = Pain at Scale

PostgreSQL is not multi-threaded. It forks a new process for each connection, which takes memory. And memory is limited on a server. Especially with double buffering, less available memory means more I/O. While connection pools and Pgbouncer can help, they may not be enough with microservices. Pgbouncer (specifically) adds another component to manage.

YugabyteDB also uses one process per connection, mirroring PostgreSQL’s protocol and query layer. This isn’t typically a scalability problem as connections can be spread across nodes. With YugabyteDB, all nodes are equal, accepting connections with read and write transactions. Ongoing work to add a database resident connection pool—based on Odyssey—aims to address applications without client-side connection pools and microservices with too many connection pools.

Explore how YugabyteDB’s new Connection Manager turns a key Postgres weakness into a strength>>>

#6: Primary Key Index Takes Up A Lot of Space

PostgreSQL stores rows in heap tables, just like Oracle, and the primary key is an additional secondary index. This means that more space and more reads (even with the index-only access) are needed to read the table.

YugabyteDB stores tables in its primary index structure, a log-structured merge-tree (LSM) with the first level in memory. This ensures fast access by primary key, and real index-only access by secondary indexes.

#7: Major Version Upgrades Can Require Downtime

PostgreSQL upgrades require planned downtime. The application must be stopped for the upgrade and subsequent statistic gathering (ANALYZE). The amount of downtime depends on the database size.

Being a distributed database, YugabyteDB permits online upgrades by updating nodes one at a time in a rolling fashion. In an RF=3 deployment, one node can be offline without affecting application availability. Restarting is quick, or a new node can be added and the old one removed once data has been rebalanced.

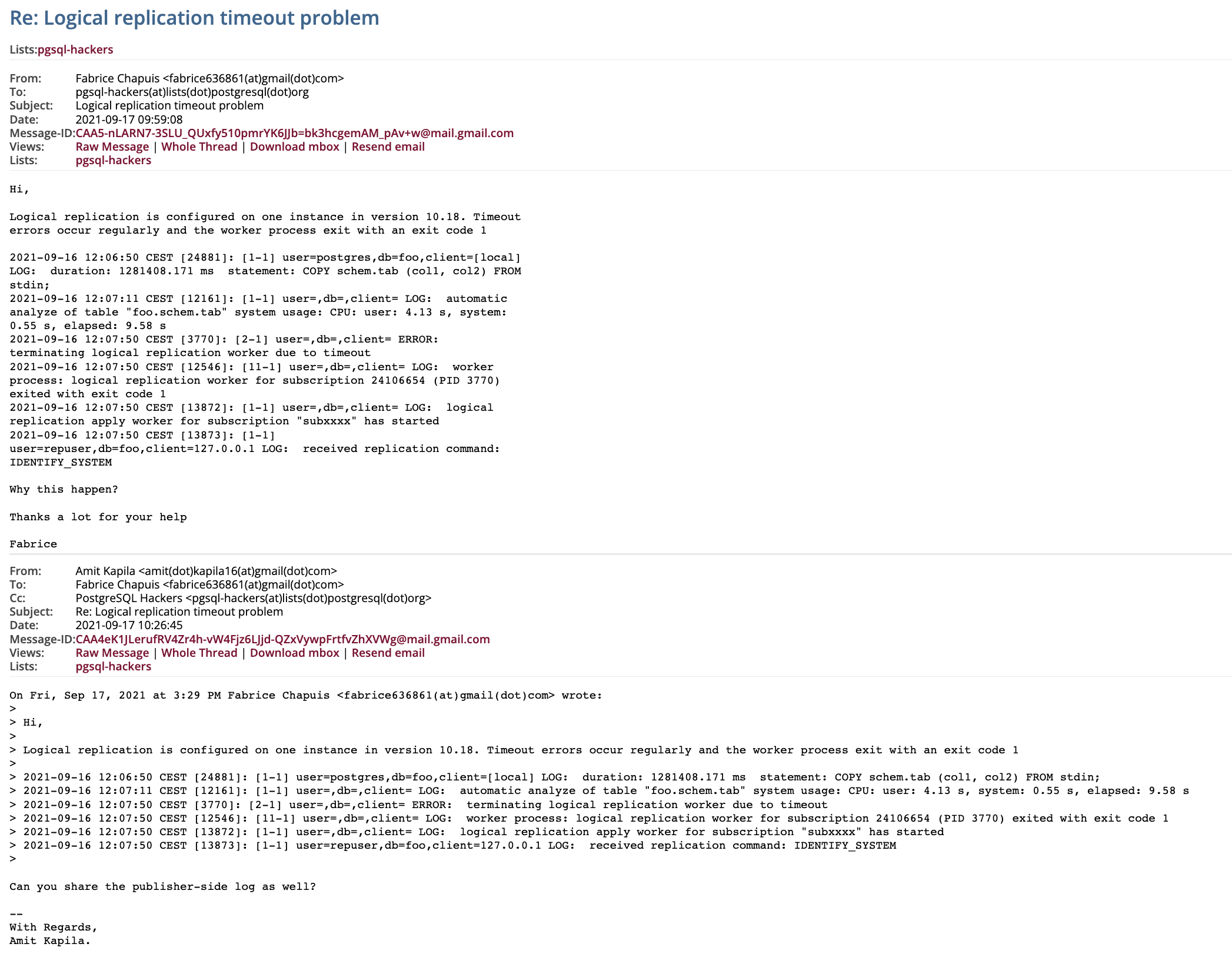

#8: Cumbersome Replication Setup and Troubleshooting Process

Setting up and troubleshooting PostgreSQL replication can be complex, as evidenced by a recent discussion (below). However, this example also highlights the valuable support available from the open-source community.

YugabyteDB is designed for fully automated replication, including sync, async, and change data capture (CDC), all managed at the per-shard Raft group writes level. Enabling sync replication is as easy as setting replication-factor=3 when starting the cluster. And, like PostgreSQL, YugabyteDB is open source and has a supportive and collaborative community.

#9: Ridiculous No-Planner-Hints Dogma

The PostgreSQL core team has historically opposed the use of query planner hints. Even when considered beneficial to give directives to the optimizer—like materializing a CTE or not—they have been implemented in the SQL syntax, which breaks compatibility with previous versions.

YugabyteDB, on the other hand, includes pg_hint_plan by default. While hints shouldn’t be overused, they are available when needed for testing or quick workarounds. This approach is also possible with PostgreSQL, but in IT, where many deployments occur without specific configurations, defaults matter.

#10: No Block Compression

PostgreSQL typically relies on the operating system for file-related tasks, including compression. While some use ZFS for its features, achieving consistent and acceptable database performance on ZFS is challenging.

YugabyteDB provides both compression and encryption—at rest (storage) and in transit (network). These features are essential for a cloud-native database, considering the costs of storage and egress traffic between different areas or regions.

A Word About the Challenges of PostgreSQL Multi-Version Concurrency Control

We’re not alone in discussing PostgreSQL problems. There are many others out there blogging and writing on this subject. A common source of these issues/problems is the PostgreSQL implementation for multi-version concurrency control (MVCC), which aligns with our 4th point above. Some consider PostgreSQL’s MVCC to be particularly problematic due to issues with:

- Version copying

- Table bloat

- Secondary index maintenance

- Vacuum management

Is PostgreSQL Obsolete? No, It’s Evolving.

Despite the challenges mentioned, a piece of often-shared advice is to start with PostgreSQL and then determine if it doesn’t suit your use case. Some of the issues can be mitigated with the use of managed services, while some are widely known and can be managed proactively. The bottom line is that PostgreSQL excels as an open-source database for a broad range of workloads.

However, for OLTP workloads that require scale and high availability, consider YugabyteDB. As a fully open-source solution compatible with PostgreSQL, the YugabyteDB database has embraced and evolved PostgreSQL to provide much-needed support for cloud-native applications. It also offers the flexibility to easily transition to (or from) PostgreSQL as needed. Imagine simplifying the complexities associated with vacuum and replication management with the availability and elastic scalability you need.

The choice, ultimately, will depend on your specific use case. Both PostgreSQL and YugabyteDB have their merits, with PostgreSQL being appreciated for its community, ecosystem, open-source model, and standard SQL compatibility. YugabyteDB’s scalable design builds on these strengths, offering additional improvements such as a batched nested loop and loose index scan, as well as an in-database connection pool. As a Developer Advocate at Yugabyte, I take great pleasure in helping others learn and share in this area. The growing interest in YugabyteDB brings more opportunities for collaboration, growth, and enjoyment.

Making PostgreSQL Work For a Cloud Native World

Need the power of PostgreSQL but with the scalability and resiliency for today’s cloud native era? Discover how to extend and evolve PostgreSQL to meet the demands of modern applications.

Need the power of PostgreSQL but with the scalability and resiliency for today’s cloud native era? Discover how to extend and evolve PostgreSQL to meet the demands of modern applications.