Exploring End-to-End AI Workflows Using n8n and YugabyteDB

This blog explores how to build Agentic AI workflows using tools like n8n, YugabyteDB, and LangChain. Instead of triggering one-off AI tasks, we share how you can create intelligent systems that can understand context, remember past actions, make decisions, and take the next step automatically.

With n8n handling the workflow orchestration, YugabyteDB storing vector embeddings and memory, and LangChain powering LLM-based reasoning and tool use, we show how everything connects to form an AI that acts more like a helpful assistant than a simple script.

Whether you’re automating insights from documents or building chatbots that remember and respond smartly, this blog shows how to bring real-world Agentic AI to life.

What is an AI Workflow?

An AI workflow is an orchestrated sequence of automated tasks that integrates data ingestion, semantic processing, AI-driven inference, and actionable outcomes. Unlike isolated AI models, AI workflows combine multiple components such as:

- Vector embeddings for semantic understanding

- Language models (LLMs) for contextual reasoning

- Databases for storage and retrieval

- Automation platforms for orchestration

Together, they enable you to:

- Extract knowledge from unstructured data (PDFs, text)

- Perform a semantic search with vector similarity

- Generate context-aware responses via LLMs

- Automate user interactions through structured pipelines

This forms the foundation for intelligent applications like chatbots, document Q&A systems, semantic search engines, and AI-driven automation in modern enterprises.

Why AI Workflows Are Gaining Strategic Importance

Historically, extracting specific information from unstructured data—such as PDFs, Word documents, HTML, and plain text—has been challenging.

Unlike traditional relational databases (RDBMS), where structured data can be queried using SQL, unstructured data lacks a straightforward way to search and retrieve precise information. That has now changed with the advent of vectorization.

Documents can now be chunked, converted into vector embeddings, and stored in the database as vectors. Natural language queries can also be vectorized and compared to these embeddings, enabling semantic search, which allows you to find the most relevant information based on meaning rather than exact keywords.
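To make the comparison step concrete, here is a minimal sketch of semantic search by cosine similarity. The three-dimensional vectors are hand-made stand-ins for real embeddings (which typically have hundreds or thousands of dimensions), and the names `cosine_similarity` and `top_k` are illustrative, not part of any library mentioned in this blog.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query_vec, chunks, k=2):
    """Return the texts of the k chunks most similar to the query vector."""
    ranked = sorted(
        chunks,
        key=lambda c: cosine_similarity(query_vec, c["embedding"]),
        reverse=True,
    )
    return [c["text"] for c in ranked[:k]]

# Hand-made vectors stand in for embeddings produced by a model.
chunks = [
    {"text": "Refund policy for annual plans", "embedding": [0.9, 0.1, 0.0]},
    {"text": "Office holiday calendar", "embedding": [0.0, 0.2, 0.9]},
    {"text": "How to cancel a subscription", "embedding": [0.8, 0.3, 0.1]},
]
query_vec = [1.0, 0.2, 0.0]  # pretend embedding of "can I get my money back?"

results = top_k(query_vec, chunks, k=2)
print(results)
```

The query shares no keywords with the refund chunk, yet it ranks first because the vectors point in nearly the same direction. In a real deployment the database performs this ranking, so the application never loops over chunks itself.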

Enterprises are adopting AI workflows to:

- Transform static documents into dynamic knowledge accessible via natural language

- Enable semantic understanding beyond keyword search

- Automate repetitive tasks with AI assistance

- Improve decision-making with context-aware insights

The convergence of Large Language Models (LLMs), vector databases, and low-code orchestration platforms (like n8n) is revolutionizing the way businesses interact with their data.

AI workflows are no longer experimental—they are mission-critical for customer support, internal knowledge management, compliance automation, and more.

Why YugabyteDB is a Game-Changer for Vector Embeddings

While several dedicated vector databases (Pinecone, Weaviate, Chroma) are available, YugabyteDB pgvector stands out for enterprise use cases due to the following features.

| Capability | YugabyteDB pgvector | Typical Vector Databases |
|---|---|---|
| PostgreSQL Compatibility | Native pgvector extension, standard SQL interface | Custom APIs, steep learning curve |
| Transactional Integrity | Full ACID compliance, ideal for OLTP workloads | Often eventual consistency |
| Unified Data Storage | Combines relational and vector data seamlessly | Requires maintaining a separate relational database |
| Distributed SQL Architecture | Horizontal scalability, geo-partitioning | Scalability depends on provider implementation |
| Enterprise Control and Flexibility | Open-source, deploy anywhere (cloud/on-prem) | SaaS-first, vendor lock-in concerns |

YugabyteDB bridges the gap between vector search and transactional applications, making it the ideal choice for enterprise-grade AI workflows where both relational and semantic data need to coexist.

Key Advantages of this AI Workflow Architecture

- Unified Vector and Relational Data Platform: Simplifies architecture by using YugabyteDB for both vectors and business data.

- Scalable, Distributed, and Resilient: YugabyteDB’s distributed SQL ensures high availability, fault tolerance, and geo-distribution.

- No-Code Orchestration with n8n: Enables rapid workflow design and deployment without complex integrations.

- LLM-Powered Contextual Responses: Combines vector similarity search with an LLM (for example from OpenAI, Anthropic, or Google Gemini) to reason over the retrieved context.

- Efficient Document Processing Pipeline: Supports large PDF ingestion, chunking, embedding, and retrieval seamlessly.

- Cost Optimization and Open-source Flexibility: Avoids SaaS vector DB pricing; deployable in controlled enterprise environments.

Step-by-Step AI Workflow with n8n + YugabyteDB pgvector

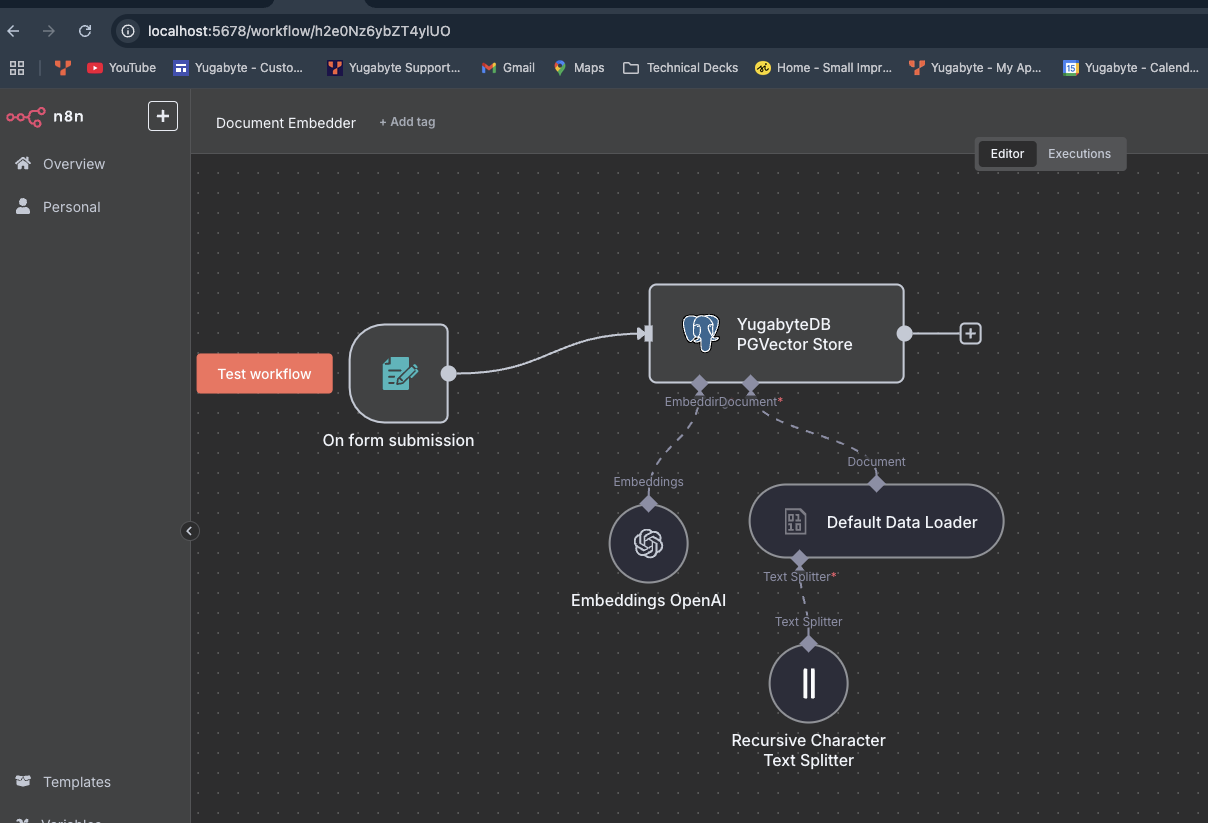

The diagram above shows the n8n workflow designer with the components that load data from a form upload into the YugabyteDB vector store using OpenAI embeddings.

Document Ingestion and Vector Embedding

The components below are used in this workflow.

- Form Submission Trigger: Captures uploaded PDF, Word, or plain-text documents through a file upload button.

- Data Loader and Recursive Character Splitter: Extracts document content and splits it into chunks suitable for embedding.

- OpenAI Embeddings Node: Generates high-dimensional vectors representing semantic content.

- YugabyteDB pgvector Store: Persists vectors in a vector-enabled YugabyteDB table alongside metadata for efficient retrieval.

The schema below shows the n8n_vector_file table, which stores the embeddings.

    yugabyte=# \d
                List of relations
     Schema |      Name       | Type  |  Owner
    --------+-----------------+-------+----------
     public | n8n_vector_file | table | yugabyte
    (1 row)

    yugabyte=# \d n8n_vector_file
                      Table "public.n8n_vector_file"
      Column   |  Type  | Collation | Nullable |      Default
    -----------+--------+-----------+----------+--------------------
     id        | uuid   |           | not null | uuid_generate_v4()
     text      | text   |           |          |
     metadata  | jsonb  |           |          |
     embedding | vector |           |          |
    Indexes:
        "n8n_vector_file_pkey" PRIMARY KEY, lsm (id ASC)

    yugabyte=# select count(1) from n8n_vector_file ;
     count
    -------
        11
    (1 row)
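To show roughly how a document ends up as a handful of rows like the 11 counted above, here is a simplified sketch of recursive character splitting. It is not the n8n or LangChain implementation: it omits chunk overlap, and the function name `recursive_split`, the separator order, and the tiny 20-character chunk size are assumptions chosen for illustration.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Split text into chunks of at most chunk_size characters,
    preferring paragraph breaks, then line breaks, then spaces.
    The separator tuple is expected to end with "" as a last resort."""
    if len(text) <= chunk_size:
        return [text] if text else []
    sep, rest = separators[0], separators[1:]
    if sep == "":
        # Last resort: hard cut every chunk_size characters.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep packing pieces into the current chunk
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too large: retry with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, rest))
    if current:
        chunks.append(current)
    return chunks

document = "Alpha beta gamma.\n\nDelta epsilon zeta eta theta.\n\nIota."
chunks = recursive_split(document, chunk_size=20)
for chunk in chunks:
    print(repr(chunk))
```

Each resulting chunk would then be embedded and stored as one row, with the chunk in the `text` column and its vector in `embedding`. Production chunk sizes are far larger than 20 characters; the small value here only makes the splitting visible.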

Interactive Q&A with Vector Search and LLM

n8n is an open-source workflow automation tool that lets you visually build and automate tasks across 300+ apps. It supports self-hosting, custom logic, API integrations, and data workflows with minimal code.

Using the n8n workflow designer, you can integrate the various AI components on either a local n8n setup or n8n cloud.

Create your first workflow:

- Add a Webhook trigger

- Add a Slack, Email, or PostgreSQL node

- Connect nodes → Execute

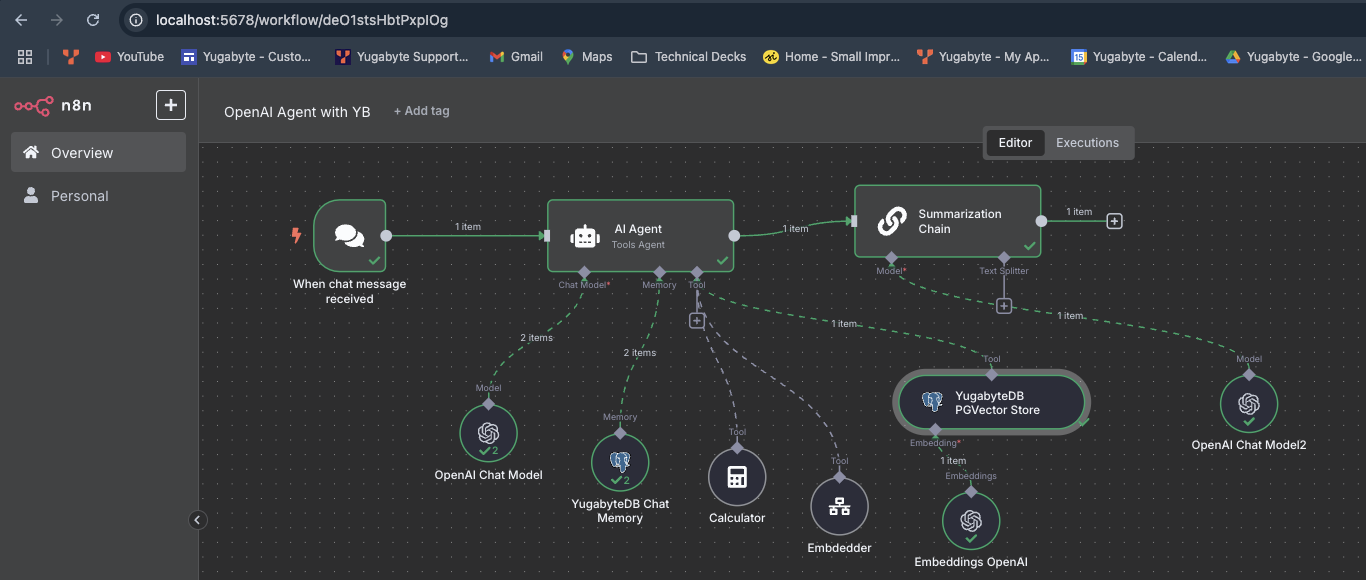

The diagram above shows the n8n workflow designer with the components behind a chat interface (Q&A or search). The chat connects to an AI agent, which pulls data from memory or from embeddings loaded into a YugabyteDB table and uses an OpenAI model to respond.

n8n can export a workflow as a JSON file or copy it to the clipboard. The JSON can be imported back into n8n or shared with others.
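Because an exported workflow is plain JSON, it can be inspected with a few lines of code before importing or sharing it. The sketch below assumes the export has top-level `nodes` and `connections` keys; the JSON shown is a trimmed, hypothetical example, and the node names and type strings in it are illustrative placeholders rather than a real export.

```python
import json

# Trimmed, hypothetical export. Real exports carry more fields
# (ids, canvas positions, credential references, settings).
exported = """
{
  "name": "Chat with documents",
  "nodes": [
    {"name": "When chat message received",
     "type": "@n8n/n8n-nodes-langchain.chatTrigger", "parameters": {}},
    {"name": "AI Agent",
     "type": "@n8n/n8n-nodes-langchain.agent", "parameters": {}}
  ],
  "connections": {
    "When chat message received": {
      "main": [[{"node": "AI Agent", "type": "main", "index": 0}]]
    }
  }
}
"""

def list_edges(workflow):
    """Return (source, target) node-name pairs from the connections map."""
    edges = []
    for source, outputs in workflow.get("connections", {}).items():
        for branches in outputs.values():
            for branch in branches:
                # An unconnected output may be null, so fall back to an empty list.
                for target in branch or []:
                    edges.append((source, target["node"]))
    return edges

workflow = json.loads(exported)
node_names = [node["name"] for node in workflow["nodes"]]
edges = list_edges(workflow)
print(node_names)
print(edges)
```

A quick listing like this is a convenient way to review what a shared workflow will do before running it.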

AI Agent Workflow with YugabyteDB – Brief Overview

This workflow showcases an end-to-end AI automation built using n8n, combining conversational intelligence with semantic search and memory. When a user sends a message, it triggers an AI Agent powered by OpenAI’s chat model. The agent uses tools like:

- YugabyteDB Chat Memory to recall past interactions,

- YugabyteDB PGVector Store for semantic search via vector embeddings,

- Summarization Chain to return concise, context-aware responses.

The system integrates vector embeddings (via OpenAI) and a retrieval mechanism to deliver accurate, memory-enhanced, and semantically enriched replies — making it a practical example of an Agentic AI workflow using LLMs and database intelligence.

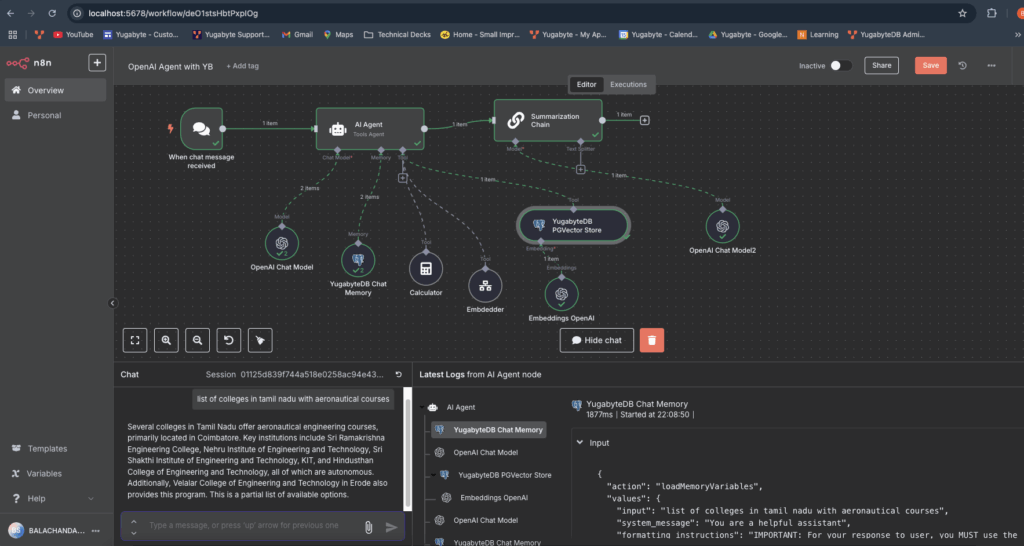

This snapshot captures a live execution of an Agentic AI workflow built in n8n, demonstrating seamless integration between OpenAI’s LLM, YugabyteDB with pgvector, and a memory-augmented toolchain.

Upon receiving the user’s query “list of colleges in Tamil Nadu with aeronautical courses”, the AI Agent dynamically:

- Retrieves past chat context from YugabyteDB Chat Memory,

- Generates embeddings using OpenAI,

- Queries the PGVector Store for semantically relevant documents,

- Returns a clear, summarized answer using the Summarization Chain.

The embedded chat window below showcases how the agent loads memory variables and formulates a contextual response. This workflow is a practical example of how LLMs can use memory, tools, and semantic retrieval in real-time to provide intelligent and relevant answers.

The following components are used in the n8n AI workflow shown above:

- Chat Message Trigger: Captures user queries from a chatbot interface.

- AI Agent Node: Orchestrates tool usage—vector search, memory recall, LLM inference.

- Embedder and pgvector Store Search: Dynamically embeds user query and performs similarity search against stored document vectors.

- Summarization Chain: Enhances search results with context-aware summarization using OpenAI.

- Response Generation: Delivers a consolidated, human-like answer back to the user.
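The order in which these components cooperate can be sketched in plain Python. Everything here is a stand-in: `embed` uses word counts instead of an embedding model, the in-memory lists replace the YugabyteDB vector store and chat memory, and `summarize` is a stub where the LLM call would go. The class and method names are hypothetical, not n8n APIs.

```python
import math
from collections import Counter

def embed(text):
    """Toy embedding: word counts. A real workflow would call an embedding model."""
    return Counter(text.lower().split())

def similarity(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0

class Agent:
    def __init__(self, documents, top_k=1):
        self.store = [(doc, embed(doc)) for doc in documents]  # stands in for the vector store
        self.memory = []  # stands in for chat memory
        self.top_k = top_k

    def retrieve(self, query):
        """Embed the query and return the most similar stored documents."""
        query_vec = embed(query)
        ranked = sorted(
            self.store, key=lambda item: similarity(query_vec, item[1]), reverse=True
        )
        return [doc for doc, _ in ranked[: self.top_k]]

    @staticmethod
    def summarize(query, context, history):
        """Stub for the LLM step: returns the retrieved text verbatim."""
        return f"(turn {len(history) + 1}) " + " | ".join(context)

    def answer(self, query):
        history = list(self.memory)                      # recall past turns
        context = self.retrieve(query)                   # embed the query and search
        reply = self.summarize(query, context, history)  # generate the response
        self.memory.append((query, reply))               # remember this turn
        return reply

agent = Agent([
    "College A offers aeronautical engineering courses",
    "College B offers marine biology courses",
    "Hostel fees are paid per semester",
])
first = agent.answer("which college has aeronautical courses")
print(first)
```

The point of the sketch is the sequence inside `answer`: memory is read before retrieval, and the new turn is written back only after the reply is produced, so the next question sees it as history.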

AI Workflow Implementation Highlights

- YugabyteDB pgvector Extension: Enables efficient vector operations.

  - Install YugabyteDB 2.25 on your operating system by following the YugabyteDB installation steps.

  - Configure the pgvector extension by following these steps: https://docs.yugabyte.com/preview/explore/ysql-language-features/pg-extensions/extension-pgvector/

- n8n Workflow Design: Visual, drag-and-drop orchestration of embedding, retrieval, and summarization.

  - To install n8n, either self-hosted or on n8n's managed cloud, follow these instructions: https://docs.n8n.io/choose-n8n/

  - Self-hosted: https://docs.n8n.io/hosting/

  - Managed n8n cloud: https://docs.n8n.io/manage-cloud/overview/


Key Business Use Cases:

Enterprise Knowledge Retrieval

Empower employees with the ability to query internal documents effortlessly using natural language. This significantly enhances productivity by minimizing the time spent searching through static files, manuals, and knowledge repositories.

Contract and Document Question Answering (Q&A)

Enable interactive Q&A capabilities on legal contracts, technical manuals, and standard operating procedures (SOPs). This improves operational efficiency in legal, compliance, and business teams by providing quick, contextually accurate responses from internal documents.

AI-Powered Customer Support Assistants

Deploy intelligent virtual assistants that provide instant, context-aware responses to customer queries. This reduces the workload on human support teams while significantly improving customer experience and response times.

Contextual Search Across Structured & Unstructured Data

Facilitate advanced search capabilities that combine metadata filters (e.g., customer ID, product type) with semantic vector-based search. Ideal for CRM systems, product catalogs, and enterprise knowledge bases where both relational and unstructured data coexist.
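As a rough illustration of combining a metadata filter with vector search in one statement, the sketch below builds a parameterized query against the `n8n_vector_file` table shown earlier. It assumes pgvector's `<=>` cosine-distance operator and the jsonb containment operator `@>` are available, uses `%s` placeholders in the style of common PostgreSQL drivers, and invents the `product_type` metadata key purely for the example. It only constructs the query and does not connect to a database.

```python
import json

def to_vector_literal(values):
    """Format numbers in pgvector's text input form, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(str(float(v)) for v in values) + "]"

def build_hybrid_query(query_embedding, metadata_filter, limit=5):
    """Return (sql, params) for a metadata-filtered similarity search."""
    sql = (
        "SELECT id, text, metadata "
        "FROM n8n_vector_file "
        "WHERE metadata @> %s::jsonb "        # relational-style filter on metadata
        "ORDER BY embedding <=> %s::vector "  # nearest by cosine distance
        "LIMIT %s"
    )
    params = (
        json.dumps(metadata_filter),
        to_vector_literal(query_embedding),
        limit,
    )
    return sql, params

# 'product_type' is a hypothetical metadata key; the short vector stands in
# for a real query embedding.
sql, params = build_hybrid_query([0.1, 0.2, 0.3], {"product_type": "laptop"}, limit=3)
print(sql)
print(params)
```

Passing the filter and the vector as parameters, rather than formatting them into the SQL string, keeps the query safe to reuse with user-supplied values.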

Conclusion

By seamlessly integrating YugabyteDB’s vector embedding capabilities, OpenAI models, and n8n workflow orchestration, businesses can develop robust and scalable AI solutions that deliver real value. This unified approach:

- Simplifies architecture by eliminating the need for fragmented, standalone vector databases.

- Scales effortlessly with YugabyteDB’s distributed SQL architecture, ensuring high availability and performance.

- Democratizes AI-powered search and question-answering through intuitive low-code orchestration with n8n.

This is far more than a technical enhancement — it represents a strategic enabler for enterprise-wide AI transformation, bridging the gap between data and actionable intelligence.

Want to know more about vector search? Check out this recent blog which shares how YugabyteDB integrates a distributed vector indexing engine powered by USearch to deliver a fast, scalable, and resilient vector search natively with a Postgres-compatible SQL interface.