Envoy and Service Meshes for Databases: What the Future Holds

At the Distributed SQL Summit 2020, Christoph Pakulski, software engineer at Tetrate, and Prasad Radhakrishnan, VP of data engineering at Yugabyte, presented the talk “Envoy and Service Meshes for Databases: What the Future Holds”. In the talk, they explored Envoy, service meshes (specifically Istio), and how both intersect with the database world. Christoph explained the Envoy PostgreSQL network filter extension released earlier in the year, and showed it working out of the box with YugabyteDB, a PostgreSQL-compatible database. The talk ended with a look at future enhancements to further streamline app development with Envoy and YugabyteDB.

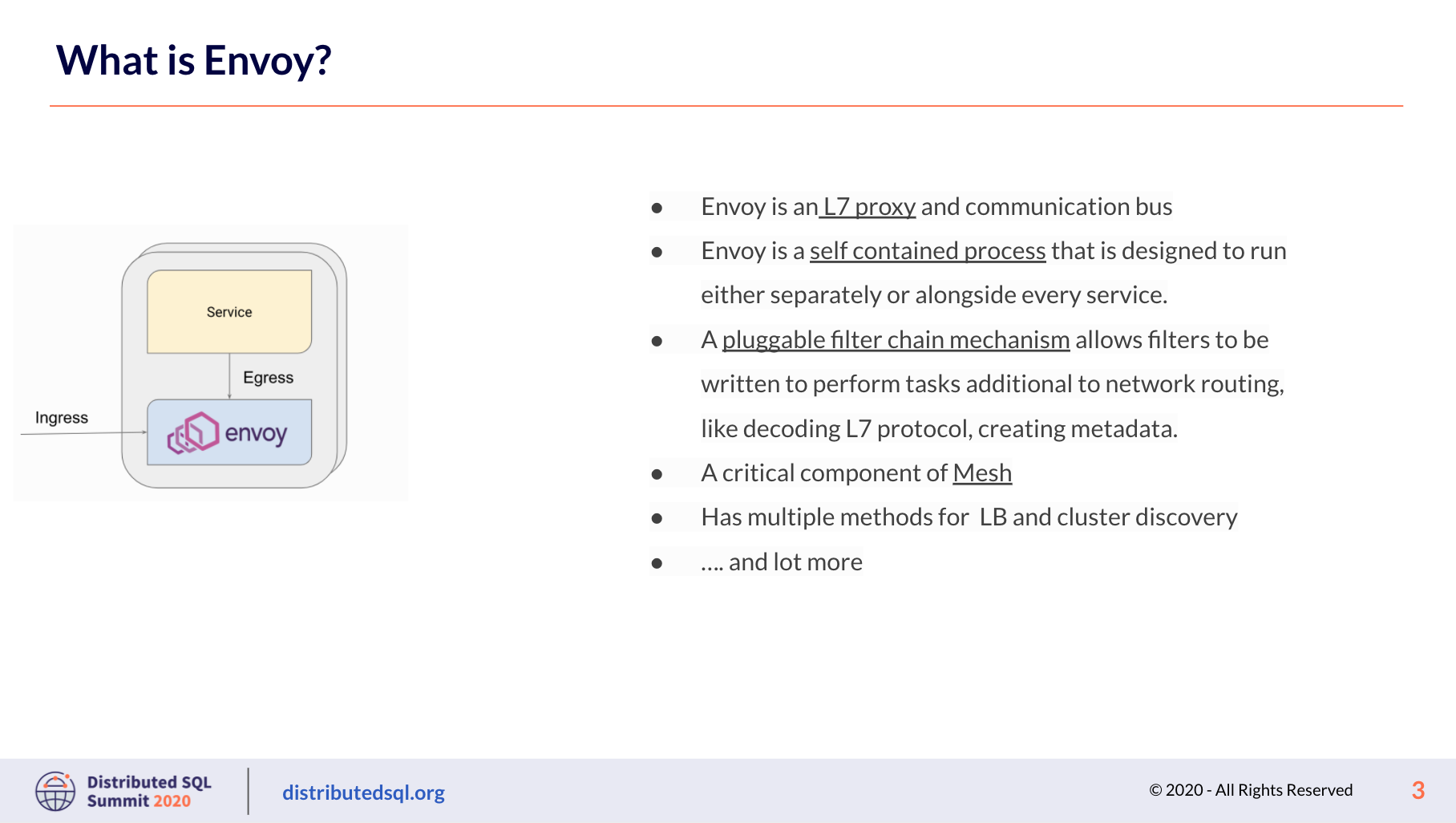

What is Envoy?

Christoph explains that Envoy empowers your application and application developers to concentrate on business logic, instead of network delivery, which Envoy handles for you.

Envoy is a layer 7 proxy. Layer 7 is the application layer, so Envoy has to understand the protocol your application speaks. In other words, Envoy Proxy has to understand what the packets it carries actually contain.

There are a few ways to deploy Envoy in concert with your application, including these two important ways: 1) your app talks to Envoy via IP (like a front end load balancer) and 2) you deploy Envoy as a sidecar, where Envoy intercepts all the traffic to your application, before it even reaches the application.

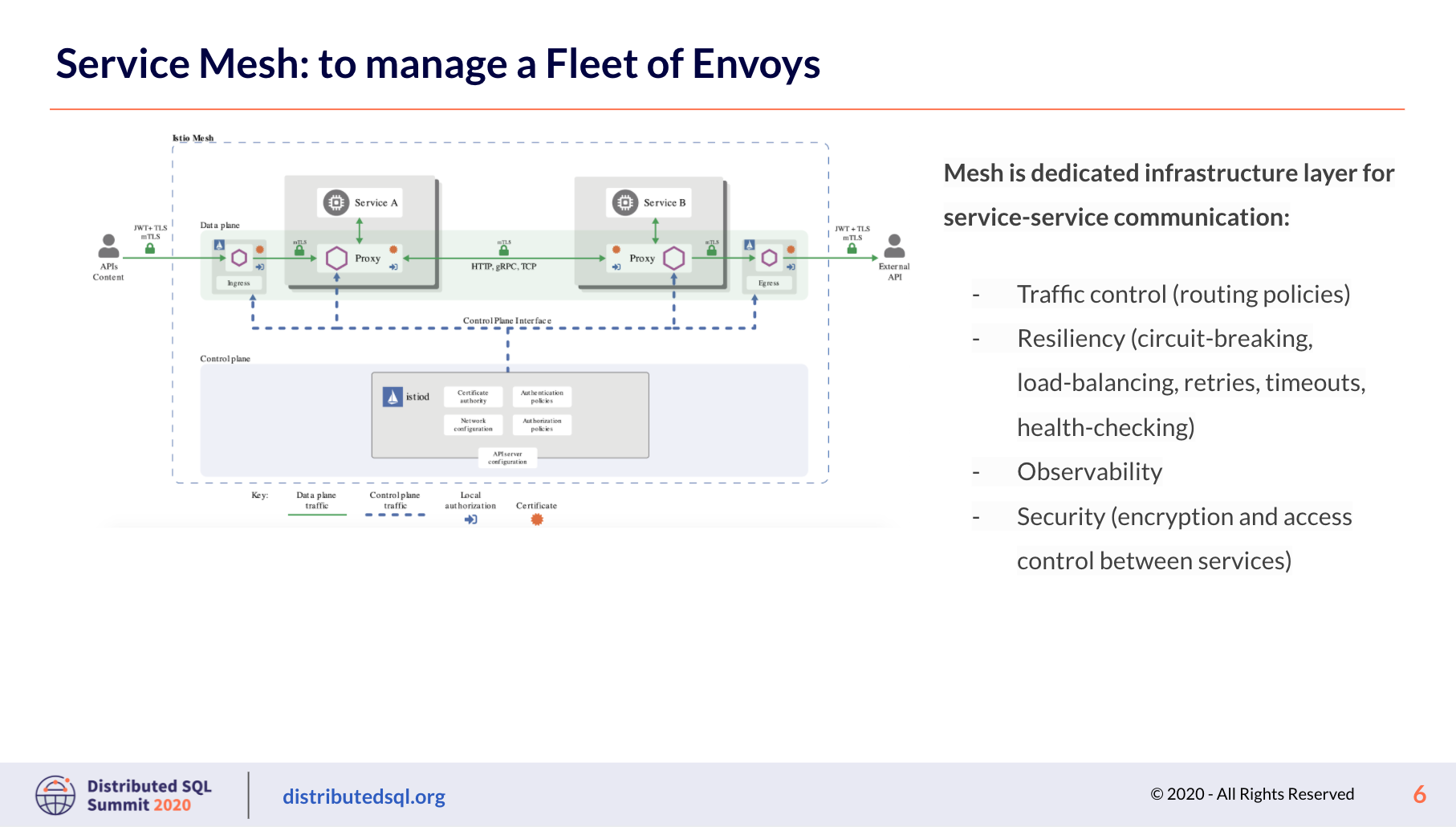

Where do service meshes come into the picture?

Usually you turn to a service mesh when you decompose your application into smaller pieces called services or microservices. These are small applications that each provide very limited functionality, and they need to talk to each other. Nothing stops you from programming communication mechanisms into the application itself, but you can get significant efficiencies and benefits by deploying a service mesh. What’s a service mesh? It is a dedicated infrastructure layer for managing service-to-service communication. You can program application networking logic in one place, instead of into each service or each Envoy attached to a service that you have deployed. This matters most when your Envoys are deployed in a sidecar model, with an Envoy instance attached to each service: a mesh like Istio gives you a single control plane to manage the entire fleet of Envoy proxies, simply and efficiently.

What if you have multiple meshes, and/or a mix of services running on cloud infrastructure and others on VMs? You can take a look at Tetrate Service Bridge (TSB), which brings all the services of your organization – no matter the workload or environment – into a single unified control plane.

Envoy filters and the Postgres filter for Envoy

Christoph next introduces us to Envoy filters and specifically the Postgres filter for Envoy Proxy. As the slides put it, an Envoy filter is: “a module in the connection or request processing pipeline providing some aspect of request handling. An analogy from Unix is the composition of small utilities (filters) with Unix pipes (filter chains).” Christoph provides additional detail: a “good example is looking into the body of the message, and that’s what the filter is. Basically is that extra operation, which is on top of just the delivery.” Envoy has three kinds of filters: listener, network, and HTTP filters.

Diving into the Postgres network filter, Christoph explains that part of the filter’s job is to really understand Postgres messages. Envoy knows if the request is a SELECT from a specific table, for example. And when the reply comes, Envoy knows whether it was a success or not, or if the request resulted in an error.

The Postgres filter can perform two operations. The first is producing runtime statistics, such as the number of messages, inserts, updates, errors, and commits. The second is producing metadata. Christoph further explains, “Metadata is kind of extra information, which was attached to this Postgres message … Metadata would be if you do a select star from something, metadata would be that this packet contains just the read operation from a specific database [or] from a specific table. If it was a write operation, a kind of insert, it would tell you that this packet carries write operations towards a specific table and a specific database.” An example of how you can use the metadata is to “program the next filter in line, [for example] the access control filter saying ‘I do not allow any writes into a database’ and [the packet] will immediately stop and never even reach the database.” A separate write-up covers using Envoy Proxy’s PostgreSQL and TCP filters to collect Yugabyte SQL statistics in more detail.
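To make the statistics-plus-metadata idea concrete, here is a minimal toy sketch in Python. It is not Envoy code and not how the real filter is implemented: the real filter decodes the Postgres wire protocol, while this sketch only inspects SQL text. The class names (`PostgresLikeFilter`, `DenyWritesFilter`), the `run_chain` helper, and the metadata keys are all hypothetical names chosen for illustration.

```python
import re
from collections import Counter
from dataclasses import dataclass, field

# Toy model only: operations treated as writes by the access control step.
WRITE_OPS = {"insert", "update", "delete"}
KNOWN_OPS = WRITE_OPS | {"select"}
# Naive table lookup: the identifier following FROM, INTO, or UPDATE.
TABLE_RE = re.compile(r"\b(?:from|into|update)\s+([A-Za-z_][\w.]*)", re.IGNORECASE)


@dataclass
class Message:
    sql: str
    metadata: dict = field(default_factory=dict)


class PostgresLikeFilter:
    """Counts statements and attaches operation/table metadata."""

    def __init__(self):
        self.stats = Counter()

    def on_message(self, msg):
        words = msg.sql.split()
        op = words[0].lower() if words else "other"
        if op not in KNOWN_OPS:
            op = "other"
        match = TABLE_RE.search(msg.sql)
        msg.metadata = {"operation": op, "table": match.group(1) if match else None}
        self.stats["statements"] += 1
        self.stats[f"statements_{op}"] += 1
        return True  # this filter only observes; it never blocks


class DenyWritesFilter:
    """Next filter in line: stops any message tagged as a write."""

    def __init__(self):
        self.denied = 0

    def on_message(self, msg):
        if msg.metadata.get("operation") in WRITE_OPS:
            self.denied += 1
            return False
        return True


def run_chain(filters, msg):
    """Return True if every filter passes the message; stop at the first refusal."""
    return all(f.on_message(msg) for f in filters)


if __name__ == "__main__":
    pg = PostgresLikeFilter()
    chain = [pg, DenyWritesFilter()]
    for sql in ("SELECT * FROM orders", "INSERT INTO orders VALUES (1)"):
        msg = Message(sql)
        print(sql, "->", "forwarded" if run_chain(chain, msg) else "blocked", msg.metadata)
    print(dict(pg.stats))
```

The ordering is the point of the sketch: the first filter only observes and annotates, and the second one decides based on that annotation, so a blocked write never reaches whatever sits at the end of the chain.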

What is YugabyteDB?

In the second half of the presentation, Prasad walks us through YugabyteDB, an open source distributed SQL database with a Google Spanner-like architecture. At the core of YugabyteDB is a distributed document store called DocDB, which also includes a distributed transaction manager. On top of it, the query layer offers a SQL-compatible interface (YSQL) and a NoSQL interface (YCQL). So one database gives you multiple APIs, and the YSQL interface is Postgres compatible.

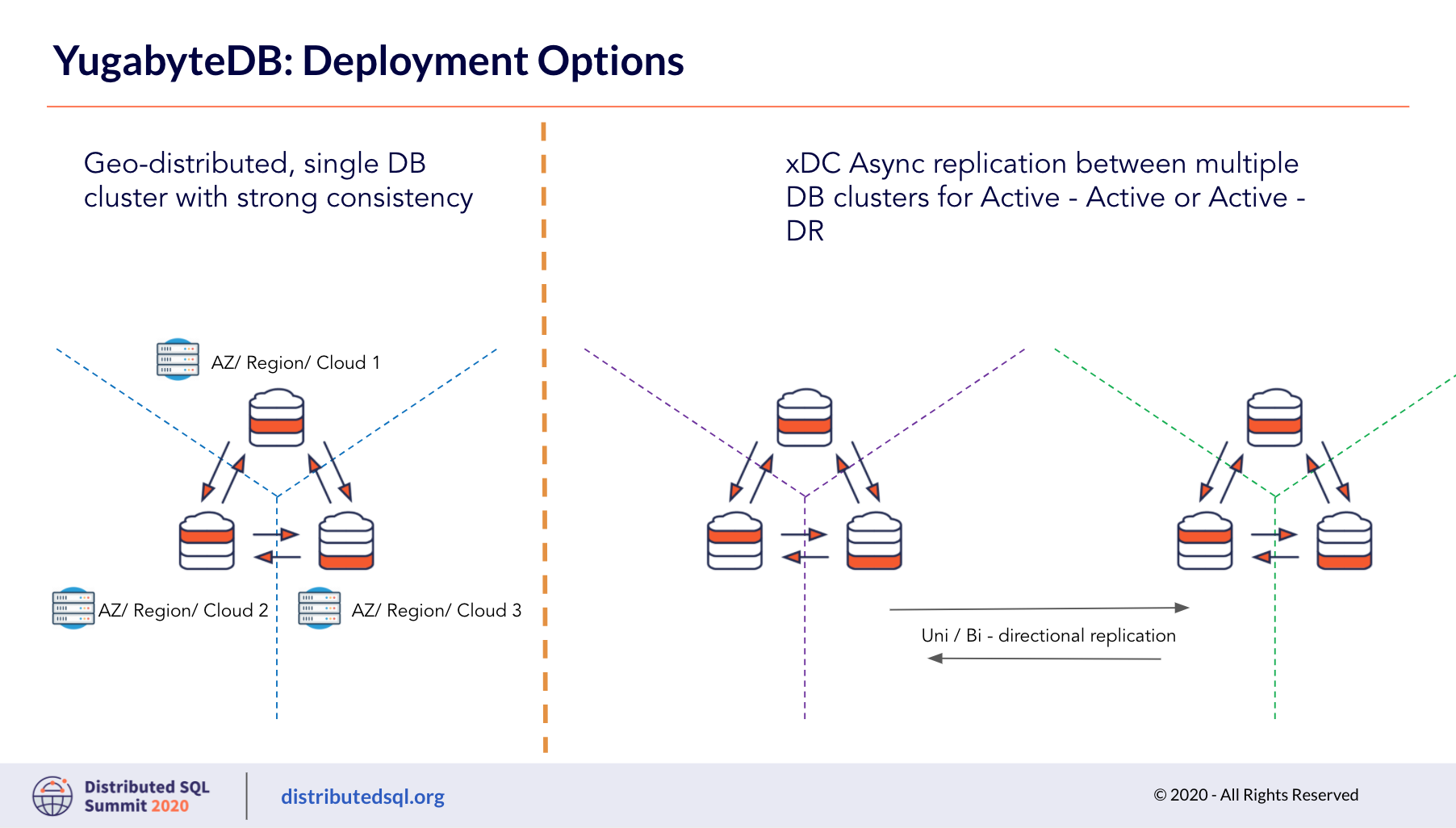

Prasad explains the YugabyteDB deployment options. The default is a single cluster installed across failure domains of your choice, such as availability zones (AZs), regions, or cloud providers, or in a hybrid cloud topology. In this case, you would configure the load balancers to send traffic to the AZs in order of preference.

In another deployment option, let’s call it an active/DR cluster, the active cluster serves all traffic, and if something happens to it, traffic switches over to the DR cluster. In this scenario, you set up asynchronous replication between the clusters so that data is continuously replicated to the DR cluster. If the active cluster goes down, you can modify the driver, load balancer, and/or DNS so the app connects to the DR cluster that is still alive.
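The switchover logic described above can be sketched as "try endpoints in preference order and use the first one that responds". The Python below is only an illustration under stated assumptions: the hostnames are hypothetical, port 5433 is assumed to be the YSQL port, and a plain TCP connect is a crude stand-in for a real health check. The talk does not prescribe this mechanism.

```python
import socket
from typing import Callable, Optional, Sequence, Tuple

Endpoint = Tuple[str, int]


def tcp_reachable(endpoint: Endpoint, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to (host, port) succeeds within the timeout."""
    try:
        with socket.create_connection(endpoint, timeout=timeout):
            return True
    except OSError:
        return False


def pick_endpoint(
    endpoints: Sequence[Endpoint],
    is_alive: Callable[[Endpoint], bool] = tcp_reachable,
) -> Optional[Endpoint]:
    """Return the first live endpoint in preference order, or None if all are down."""
    for endpoint in endpoints:
        if is_alive(endpoint):
            return endpoint
    return None


if __name__ == "__main__":
    # Hypothetical addresses: active cluster first, DR cluster second.
    preference = [("active.example.internal", 5433), ("dr.example.internal", 5433)]
    print("connect to:", pick_endpoint(preference))
```

Because replication to the DR cluster is asynchronous, a real failover procedure would also have to account for writes that had not yet been replicated when the active cluster went down; this sketch only covers choosing where to connect.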

Demo of Envoy and Yugabyte

Finally, Christoph demonstrates that Envoy understands what happens between the client and the YugabyteDB nodes running in the cloud. He configures the listener and the filter chain. The chain includes the Postgres proxy filter, because the demo uses YugabyteDB’s Postgres-compatible API, followed by a TCP proxy filter as the delivery method.
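The configuration used in the demo is not reproduced here, so the following is only a sketch of what such a listener might look like, written as a Python script that emits JSON (Envoy accepts JSON as well as YAML configuration). The field names follow the Envoy v3 API as best understood; the `v3alpha` type URL for the Postgres filter in particular can differ between Envoy versions and should be checked against the Envoy documentation for your release. The ports, addresses, cluster name, and stat prefixes are assumptions for illustration.

```python
import json


def build_envoy_config(listen_port, db_host, db_port, admin_port=9901):
    """Build a minimal static Envoy config: Postgres filter first, TCP proxy last."""
    postgres_filter = {
        "name": "envoy.filters.network.postgres_proxy",
        "typed_config": {
            # Assumption: type URL as documented for the alpha Postgres filter.
            "@type": "type.googleapis.com/envoy.extensions.filters.network."
                     "postgres_proxy.v3alpha.PostgresProxy",
            "stat_prefix": "egress_postgres",
        },
    }
    tcp_proxy = {
        "name": "envoy.filters.network.tcp_proxy",
        "typed_config": {
            "@type": "type.googleapis.com/envoy.extensions.filters.network."
                     "tcp_proxy.v3.TcpProxy",
            "stat_prefix": "tcp_postgres",
            "cluster": "yugabyte_cluster",  # hypothetical cluster name
        },
    }

    def socket_address(host, port):
        return {"socket_address": {"address": host, "port_value": port}}

    return {
        "admin": {"address": socket_address("127.0.0.1", admin_port)},
        "static_resources": {
            "listeners": [{
                "name": "postgres_listener",
                "address": socket_address("0.0.0.0", listen_port),
                # Order matters: the Postgres filter inspects, the TCP proxy delivers.
                "filter_chains": [{"filters": [postgres_filter, tcp_proxy]}],
            }],
            "clusters": [{
                "name": "yugabyte_cluster",
                "connect_timeout": "1s",
                "type": "STRICT_DNS",
                "load_assignment": {
                    "cluster_name": "yugabyte_cluster",
                    "endpoints": [{"lb_endpoints": [
                        {"endpoint": {"address": socket_address(db_host, db_port)}}
                    ]}],
                },
            }],
        },
    }


if __name__ == "__main__":
    # Assumed values: clients connect to 5432, YSQL listens on 5433.
    print(json.dumps(build_envoy_config(5432, "127.0.0.1", 5433), indent=2))
```

The TCP proxy filter sits last in the chain because it is the filter that actually forwards bytes upstream; the Postgres filter ahead of it only decodes and records what passes through.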

Christoph starts Envoy with trace logging, so we can see what is going on. In the first scenario, Christoph uses a locally available Envoy binary and configures it. He connects with the psql client with SSL disabled (presumably so the filter can read the Postgres messages unencrypted), then runs SQL queries, and the Postgres filter reports statistics about them at the Envoy level. With the Postgres filter, you can export the statistics and aggregate them across your fleet of Envoys, and all of this happens at the Envoy level, not in the application or the database. Christoph then shows a second way to get statistics from the Postgres filter, using GetEnvoy to simplify the process.
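As a rough illustration of aggregating these statistics across several Envoys, the sketch below parses the plain-text `name: value` lines that Envoy's admin `/stats` endpoint returns and sums the Postgres counters. The stat names follow the `postgres.<stat_prefix>.` naming from the Envoy documentation; the `egress_postgres` prefix, the cluster name, and all sample values are made up for the example, and the function names are hypothetical.

```python
from collections import Counter


def parse_postgres_stats(text, prefix="postgres."):
    """Extract integer counters whose names start with the given prefix."""
    counters = Counter()
    for line in text.splitlines():
        name, sep, value = line.partition(": ")
        value = value.strip()
        if sep and name.startswith(prefix) and value.isdigit():
            counters[name] += int(value)
    return counters


def aggregate(dumps):
    """Sum Postgres counters over the stats text of several Envoy instances."""
    total = Counter()
    for text in dumps:
        total.update(parse_postgres_stats(text))
    return total


if __name__ == "__main__":
    # Made-up sample dumps standing in for two Envoys' /stats output.
    envoy_a = (
        "postgres.egress_postgres.statements: 5\n"
        "postgres.egress_postgres.statements_select: 3\n"
        "postgres.egress_postgres.statements_insert: 2\n"
        "cluster.yugabyte_cluster.upstream_cx_total: 1\n"
    )
    envoy_b = (
        "postgres.egress_postgres.statements: 4\n"
        "postgres.egress_postgres.statements_select: 4\n"
    )
    for name, value in sorted(aggregate([envoy_a, envoy_b]).items()):
        print(name, value)
```

In practice you would more likely point a metrics system at Envoy's stats output than parse text by hand; the sketch just shows that the numbers are available per proxy and are simple to combine.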

The Postgres filter for Envoy was released earlier in 2020. Before concluding the presentation, Prasad and Christoph brainstormed potential areas for continued improvement, including some exciting features under active development.

Want to See More?

Check out all the talks from this year’s Distributed SQL Summit including Pinterest, Mastercard, Comcast, Kroger, and more on our Vimeo channel.