Database Version Control with Flyway for YugabyteDB xCluster Setup

In the software development world, source code versioning is widely discussed and companies of all sizes are starting to use code versioning tools like Git. But, what about your data layer? How do you keep your data and schemas in sync with the application layer?

In this blog, I will be discussing Flyway, a solution that helps developers better handle the version control of database schemas, and how you can leverage YugabyteDB xCluster replication and Flyway to handle data version control across multiple data centers.

Flyway overview

Flyway’s official documentation describes their tool as an open-source database migration tool that strongly favors simplicity and convention over configuration and is designed to facilitate Continuous Integration (CI) processes for any database on any platform.

Flyway uses the term “migrations” to refer to all Data Definition Language (DDL) and Data Manipulation Language (DML) changes made to the database. It operates by verifying the current database version and implementing these new migrations (as listed in the migration directory) before the application is launched.

To modify a database schema or make changes to the data, a developer will put all the database changes (DDL or DML) in a SQL script that follows a certain naming convention, which Flyway reads from a designated directory. In the context of Java Spring Boot this directory is usually src/main/resources/db/migration. As a result, all the DDL and DML changes are captured and versioned.

Flyway’s naming convention consists of:

Prefix: “V” for versioned (configurable), “U” for undo (configurable) and “R” for repeatable migrations (configurable)

Version: Version with dots or underscores separate as many parts as you like (not to be used for repeatable migrations)

Separator: “__” which is two underscores (configurable)

Description: Underscores or spaces separate the words

Suffix: .sql (configurable)

For example, the following Flyway scripts are used in YugabyteDB’s test. To make things easy, I am putting the date in the description portion.The description, however, should be something meaningful.

V1__2020_03_11.sql V1__2022_02_20.sql V1__2023_02_07.sql

YugabyteDB xCluster Replication

Nowadays, it’s common for users or enterprises to employ multiple data centers to ensure high availability (HA) for applications and databases. By default, YugabyteDB provides synchronous replication and strong consistency, even across geo-distributed data centers. However, not all scenarios require synchronous replication or can’t justify the additional complexity and operational costs associated with managing three or more data centers. To meet these needs, YugabyteDB supports multi-region deployments that utilize cross-cluster (xCluster) replication built on top of asynchronous WAL replication in DocDB.

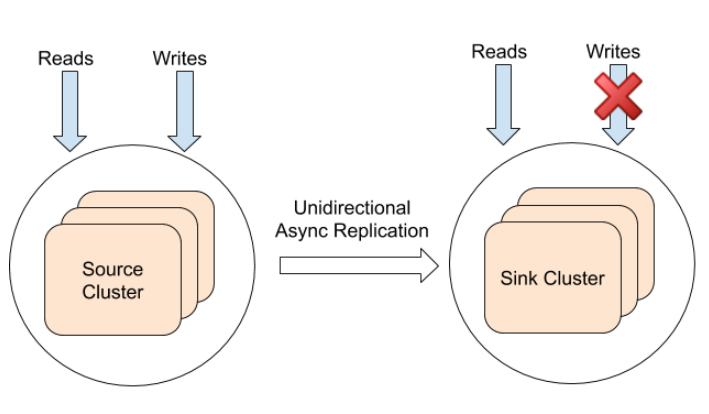

YugabyteDB xCluster Deployment: Active-Standby

Active-passive is one of the most widely adopted HA architectures. Replication in this type of deployment is unidirectional: from a source (known as the producer cluster) to a sink (known as the consumer cluster).

The sink clusters are often situated in data centers or regions distinct from the source cluster. They’re classified as standby since they don’t accept writes from the higher layer services. These are usually utilized to serve low-latency reads from the sink clusters and for disaster recovery purposes.

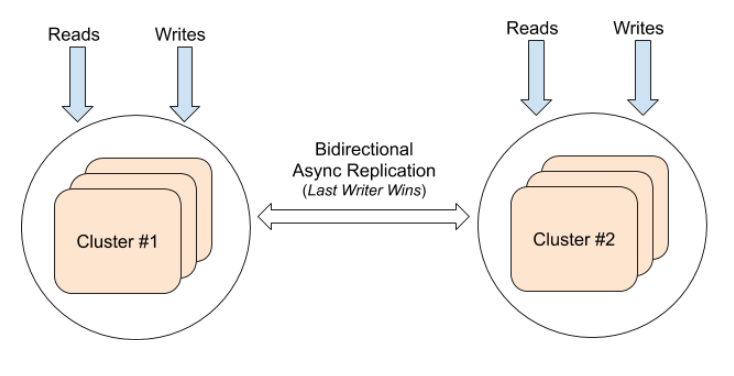

YugabyteDB xCluster Deployment: Active-Active

A more advanced topology is active-active, where both sides can perform reads and writes. This multi-writer deployment is built internally using two source-sink unidirectional replication streams as a building block. Timestamps are meticulously assigned to ensure the last writer wins semantics, and data from the replication stream is prevented from being re-replicated.

Flyway with YugabyteDB xCluster

Flyway enables version control for all database changes. However, do you know how you can maintain HA for databases while controlling versions, particularly in a multi-master deployment?

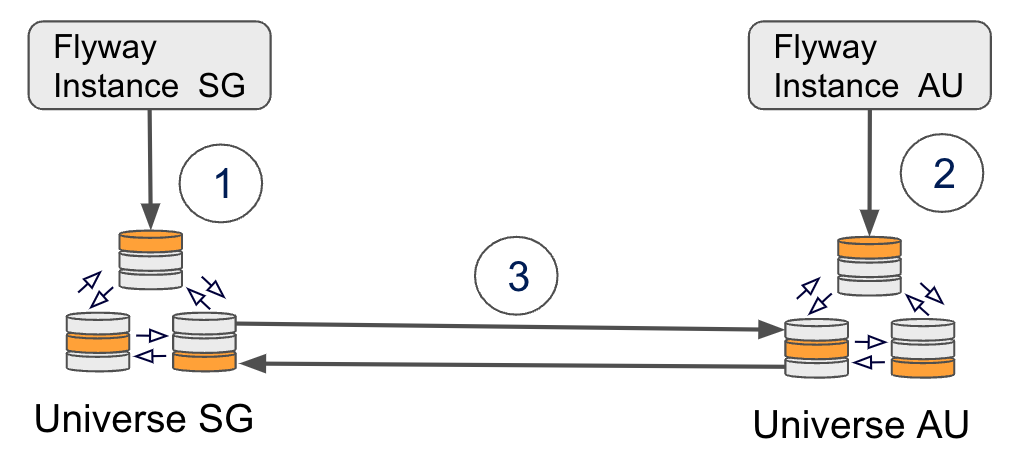

Let’s walk through an example where we test YugabyteDB xCluster while controlling database versions. We can set up two Flyway instances pointing at their respective YugabyteDB universes and validate data consistency on top of xCluster replication. Database changes in Flyway migrations, including both DML and DDL, are included.

Preparing for the Test

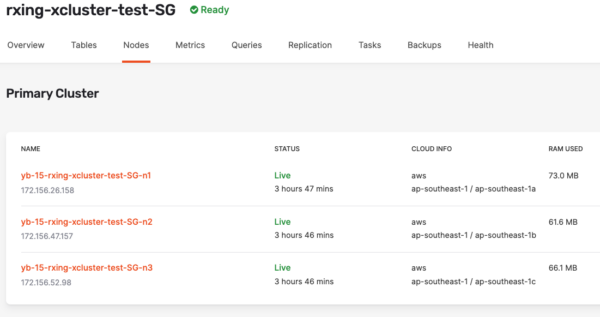

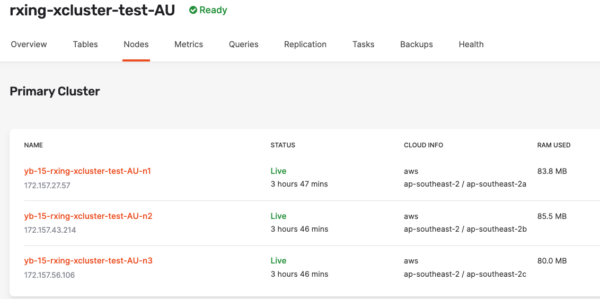

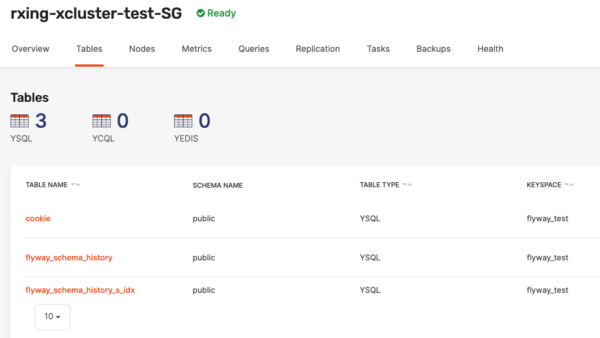

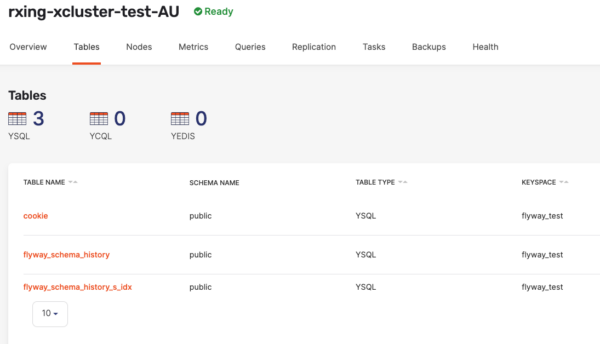

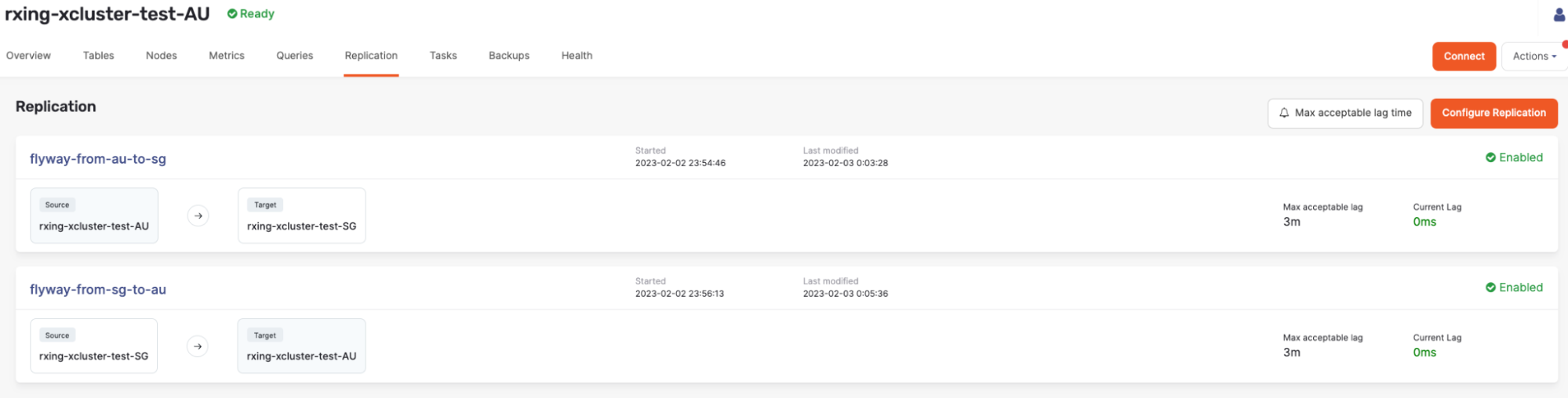

We provisioned two universes, each with three database nodes to guarantee availability zone (AZ) level fault tolerance. One universe is located in Singapore, and the other is in Australia. Below is the initial status of the clusters.

Now, let’s create the same database on both sides and set up the bidirectional xCluster replication across the Singapore and Australia universes.

yugabyte=# create database flyway_test; CREATE DATABASE

Flyway Instances Setup

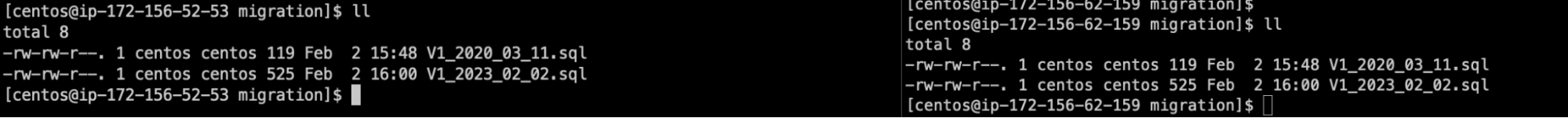

The testing was built on top of a Github project based on Java Spring Boot. Under src/main/resources/db/migration, we created a sql file with version numbers, e.g. V1_2020_03_11.sql. Below is the DDL in the sql file.

create table cookie (

id bigserial not null,

flavour varchar(255),

primary key (id)

)

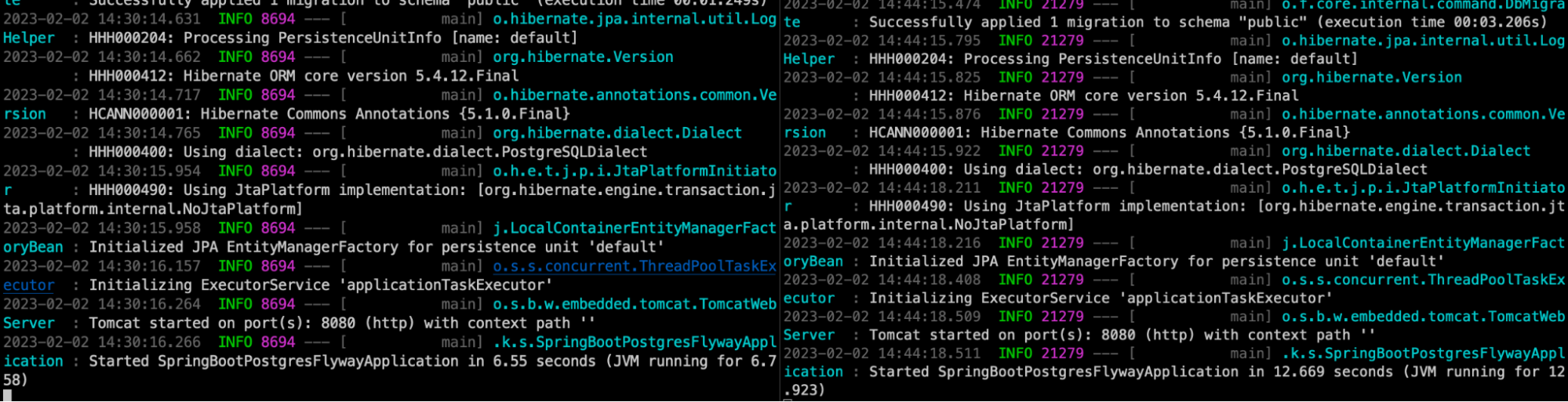

After that, we started two Flyway instances pointing to two universes separately.

Here we verified that the tables were created in both universes.

Active-Active Replication Setup

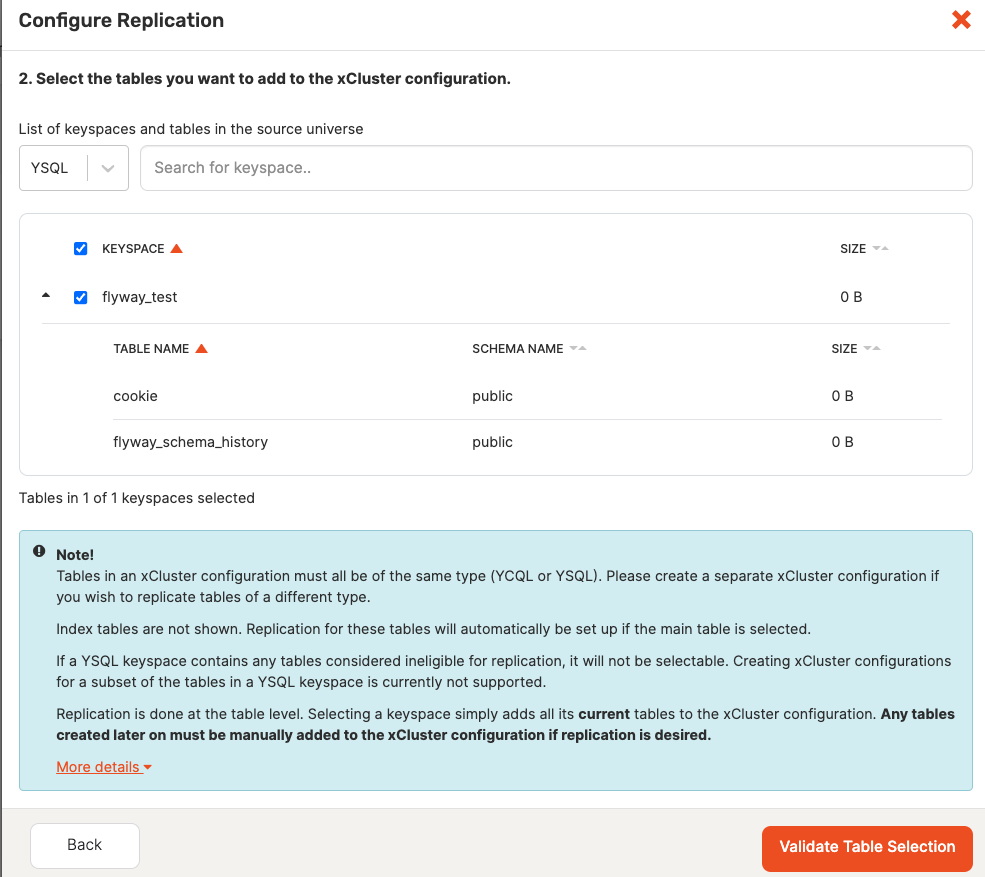

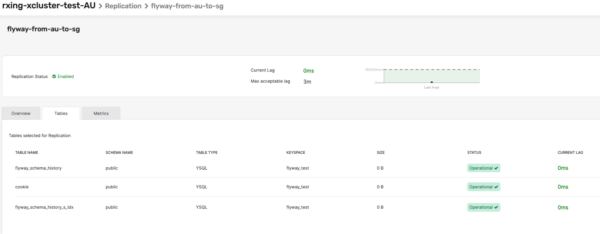

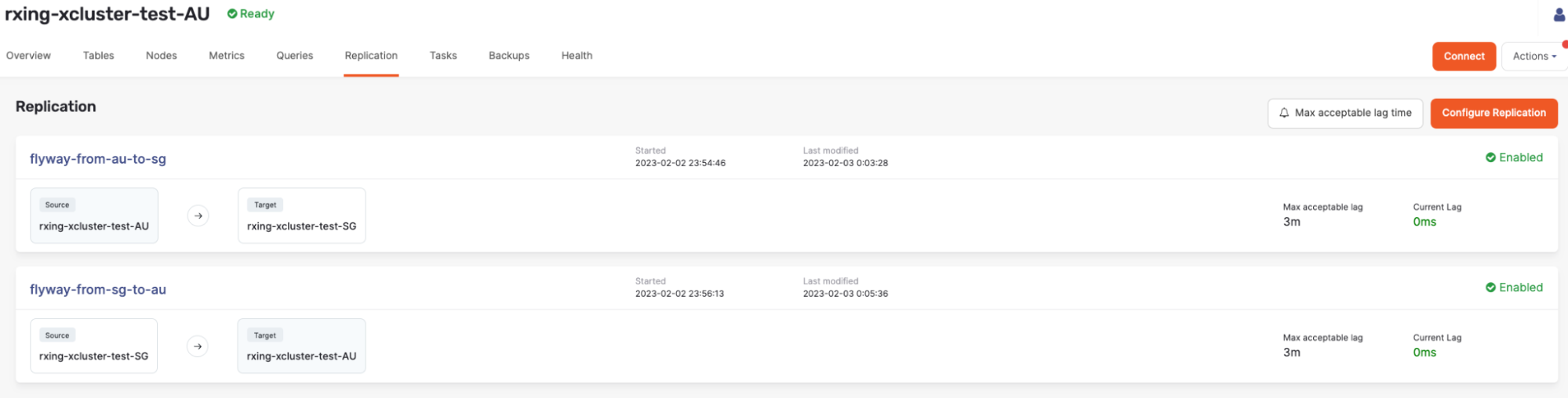

Now, let’s set up active-active xCluster replication through the YugabyteDB Anywhere UI.

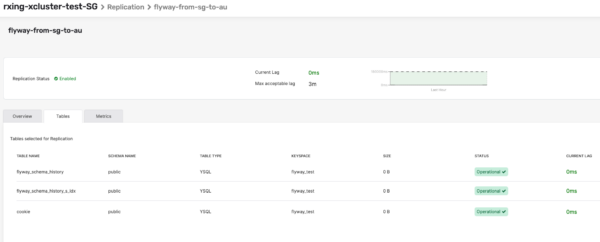

Then verify the bi-directional xCluster replication.

New Migrations (DMLs) Setup

Stop the Flyway instances while maintaining the active-active replication.

Add the new DML script into Flyway migrations for both instances. The new DML script inserts 10 new records to the table that was created by the first migration.

insert into cookie values (1, 'Chocolate Chip Cookies'); insert into cookie values (2, 'Shortbread Cookies'); insert into cookie values (3, 'Macaron Cookies'); insert into cookie values (4, 'Macaroon Cookies'); insert into cookie values (5, 'Sugar Cookies'); insert into cookie values (6, 'Oatmeal Raisin Cookies'); insert into cookie values (7, 'Gingerbread Cookies'); insert into cookie values (8, 'Snickerdoodle Cookies'); insert into cookie values (9, 'Fortune Cookies'); insert into cookie values (10, 'Fried cookies');

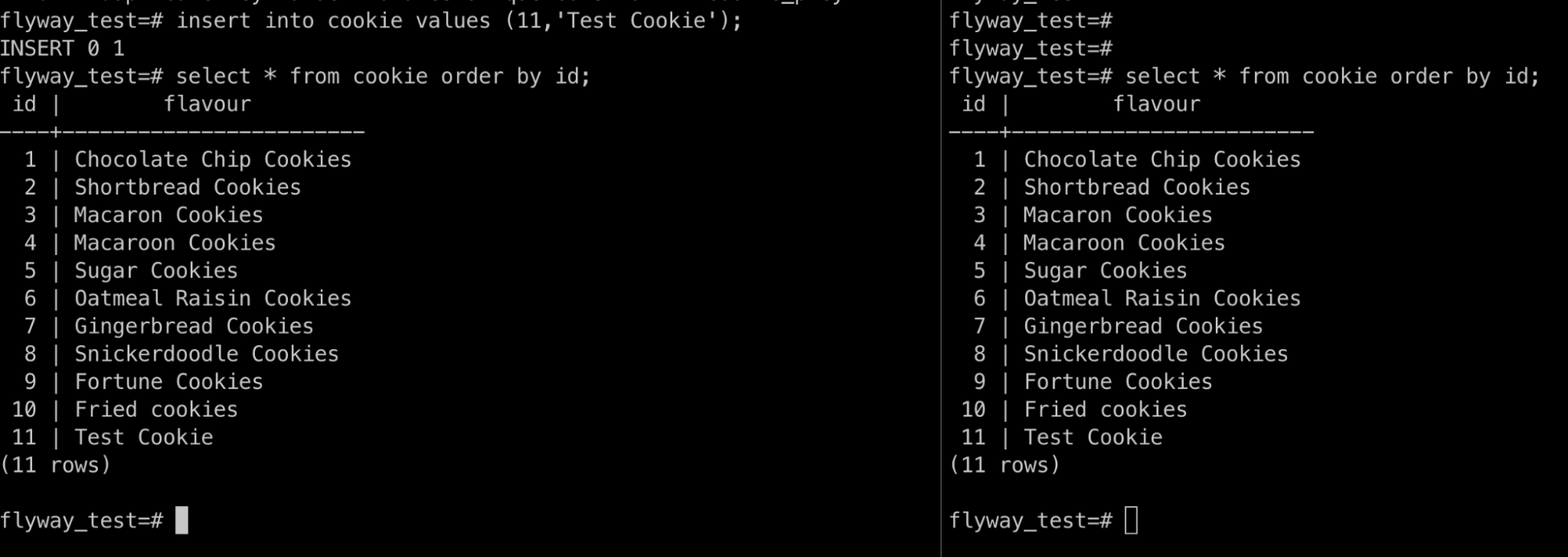

Restart Flyway Instances and Verify the Data

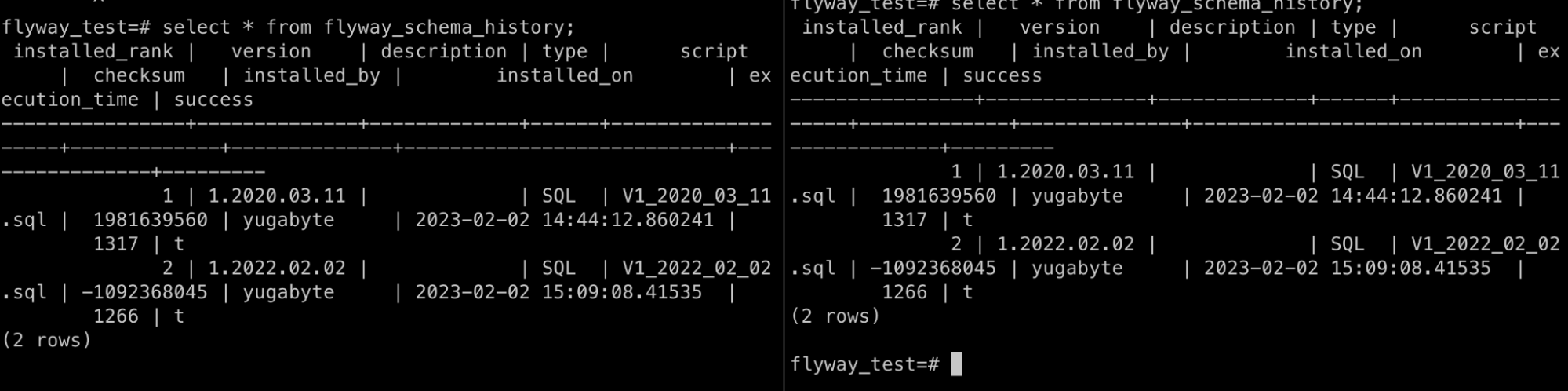

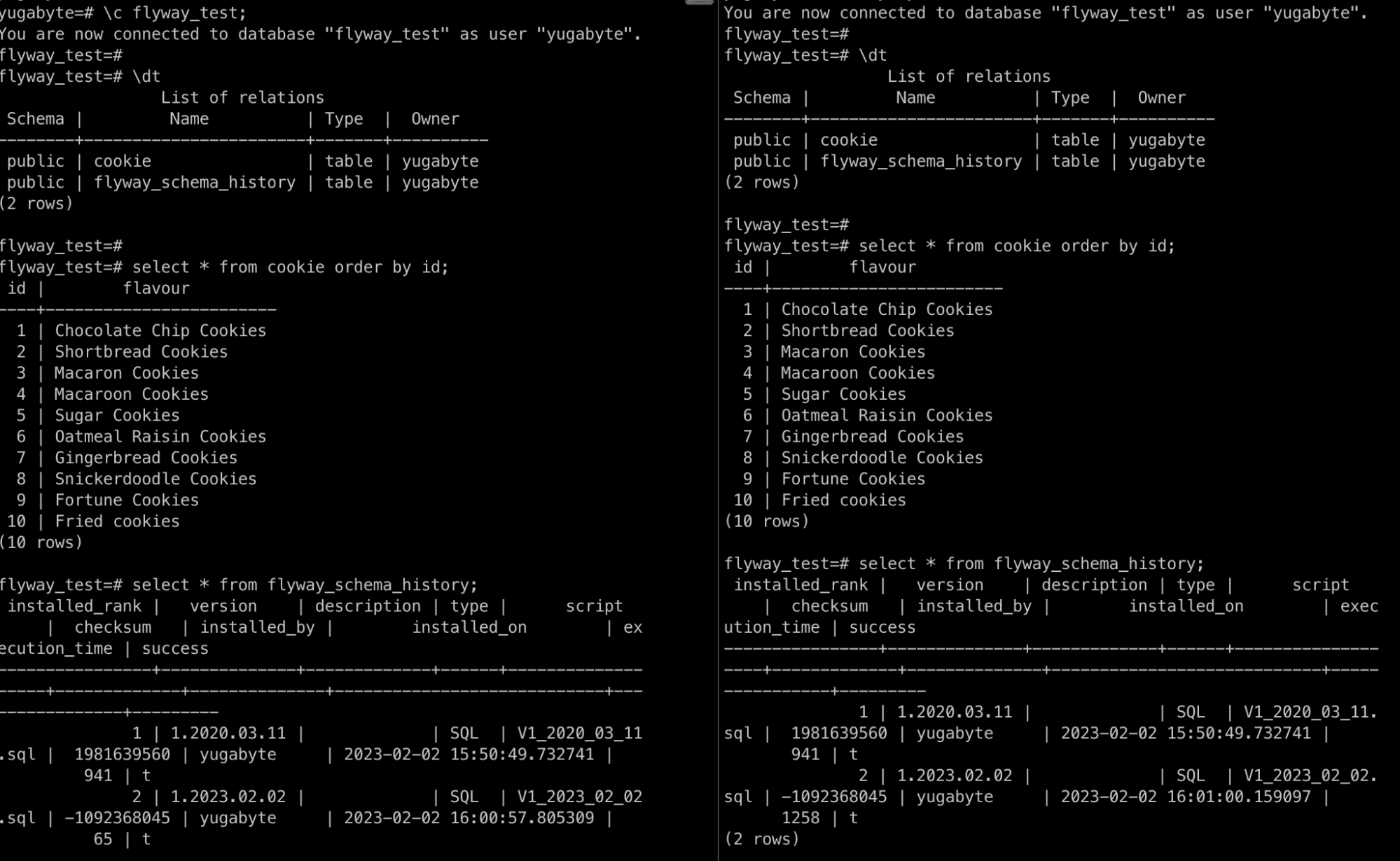

Restart the Flyway instances that point to the two universes separately. Then verify the data flow from the execution time.

Based on the data flow (shown below), there will be two scenarios.

- If the xCluster replication happens before the second Flyway instance starts, the data flow will be 1-3-2. In this case, the Flyway AU instance will skip the migration since the record exists in the flyway_schema_history table.Additionally, the execution time in flyway_schema_history will be the same. (verification shown in figure 15)

Figure 15. Data Verification For Data Flow 1-3-2 - If the xCluster replication happens after the second Flyway instance started, the data flow will be 1-2-3. Both Flyway instances will execute the migration independently; therefore, the execution time in flyway_schema_history will be different in the two universes. (Verification shown in figure 16)

Figure 16. Data Verification For Data Flow 1-2-3

Verification of Replication Status

As you can see, the replications were not broken—in both scenarios.

Now, insert a new record to validate the replication was functioning well.

Best Practices

During our test, we didn’t encounter any issues while maintaining xCluster replication. However, in rare cases for DML migrations, failure may occur if replication from Universe SG to Universe AU for the table cookie occurs before the replication of table flyway_schema_history. As a result, the migration script on Flyway instance 2 will fail due to duplicate keys. To prevent this, simply add “on conflict do nothing” for the insert statements to avoid conflict issues.

YugabyteDB xCluster replication does not automatically replicate DDL changes. This means that in the event of a DDL migration (where the records of flyway_schema_history are replicated from one universe to the other), the second flyway instance treats the DDL script as an already executed script, and the actual DDL changes are not applied due to current limitations. To overcome this, the xCluster replication must be paused before performing the DDL migration.

Because of the limitations listed above, it is safe to pause xCluster replication during migration and let Flyway handle it. However, the limitations will be addressed in the future as YugabyteDB xCluster plans to support DDL replication and transactional replication, which are already in the product roadmap.

Conclusion

YugabyteDB works with Flyway migrations to handle database version control. Cross-region active-active YugabyteDB replication can be set up without complicated configurations, and each region will maintain the database version control by using dedicated Flyway instances. Data changes i.e.DMLs, DDLs can be easily propagated to the other regions with minimal manual work on the replication setup. Future produce enhancements will automate this manual work.