Multi-Cloud Distributed SQL: Avoiding Region Failure with YugabyteDB

As Amazon CTO Werner Vogels often reminds us: “Everything fails, all the time.” Any component of the infrastructure will fail at some point. With more and more software deployed in the cloud, today’s infrastructure must provide redundancy, and the applications that sit on top of it must provide continuity through failures.

For databases, where the data is constantly changing, this involves replication. But there are different levels of failure and different protection technologies. In short, the greater the physical distance between replicas, the higher the protection. For database replication, however, a longer distance also increases latency, at least for writes.
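To put a rough number on this distance/latency trade-off, here is a back-of-the-envelope Python sketch. It assumes light travels through optical fiber at about 200 km per millisecond (roughly two thirds of its speed in a vacuum) along a straight path, so it only gives a lower bound: real network routes are longer and add switching delays. The distances are illustrative, not measured.

```python
# Lower bound on network round-trip time (RTT) from propagation delay alone.
# Assumption: light in optical fiber covers about 200 km per millisecond and
# the route is a straight line (real routes are longer and slower).
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float, km_per_ms: float = FIBER_KM_PER_MS) -> float:
    """Minimum round-trip time in milliseconds for a one-way distance in km."""
    return 2 * distance_km / km_per_ms


if __name__ == "__main__":
    # Illustrative distances: AZs tens of km apart, regions hundreds of km apart.
    for label, km in [("between AZs", 50), ("between regions", 600), ("across an ocean", 6000)]:
        print(f"{label:>16}: >= {min_rtt_ms(km):.1f} ms per round trip")
```

A synchronous commit has to wait for at least one such round trip, which is why synchronous replication is usually kept within a region.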

Database replication on AWS and other cloud providers typically works as follows:

- Synchronous replication occurs across availability zones (AZs) within the same region. AZs are far enough apart to ensure resilience to fire, flood, and power outages, yet close enough to allow for synchronous replication.

- Asynchronous replication occurs across cloud regions. The greater distance protects against larger natural catastrophes, but the added latency is too high for synchronous commits. With asynchronous replication, the faster response time you get for each commit comes at a price: transactions that have not yet been shipped to the other region are lost in case of failure.
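The data-loss window of asynchronous replication can be illustrated with a small Python sketch. It is a simplified model under stated assumptions: every change reaches the standby exactly `replication_lag_ms` after its commit, the workload is a hypothetical one commit every 10 ms, and `Commit` and `lost_on_failover` are illustrative names, not part of any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Commit:
    txn_id: int
    commit_ms: float  # when the primary acknowledged the commit to the client


def lost_on_failover(commits, failure_ms, replication_lag_ms):
    """Commits acknowledged on the primary but not yet on the standby at failure time.

    Assumes a constant replication lag. With synchronous replication the
    commit is acknowledged only once the replica has it, which corresponds
    to a lag of 0 in this model.
    """
    return [
        c for c in commits
        if c.commit_ms <= failure_ms < c.commit_ms + replication_lag_ms
    ]


if __name__ == "__main__":
    # Hypothetical workload: one commit every 10 ms, primary fails at t = 10 s.
    commits = [Commit(i, i * 10.0) for i in range(2000)]
    for lag in (0.0, 500.0, 1500.0):
        lost = lost_on_failover(commits, failure_ms=10_000.0, replication_lag_ms=lag)
        print(f"lag {lag:>6.0f} ms -> {len(lost)} acknowledged transactions lost")
```

The longer the lag, the more transactions that the application already considered committed disappear on failover: this is the RPO.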

In this blog post, we’ll explore what a region failure is and why multi-cloud is best suited for high availability. We’ll also discuss why a distributed SQL database, such as YugabyteDB, is an ideal solution for avoiding future region failures.

What is a region failure?

High availability (HA, with synchronous replication within a region) and disaster recovery (DR, with asynchronous replication across regions) look simple when considering physical failures. If a plane crashes on a data center, you can expect other AZs to be available. The same goes for floods, fires, tornadoes, lightning strikes, and earthquakes. This is because cloud providers do not choose locations at random: they study geographical characteristics to avoid failures that could affect multiple AZs. Within one AZ there can be several failure zones but, again, distance protects.

You may have heard about the OVH fire in Strasbourg, France, earlier this year. It affected multiple data centers, and there was no concept of AZs: the four data centers were close to each other, and the main fire had time to spread to two others. This was a region failure, as well as a DR situation. In that case, you face a longer recovery time (RTO) and more data loss (RPO) than in an HA scenario, depending on what is available in other regions, such as backups to restore or a standby database to activate. But the decision is easy: there’s no other option.

AWS’ us-east-1 region outage

Given where AZs are located, you would think that only a large catastrophe could take down all nearby data centers. But this does not take every component into account. Recently, AWS’ us-east-1 region was down for many hours. It was a region outage but not a DR scenario: the disks were still there, holding the data, and the servers were still running fine. They just could not be accessed because of network issues (i.e., the P, for “network partition,” in the CAP theorem).

This is completely different from physical damage. Consider asynchronous replication, which is commonly used across regions. You can activate the standby site, but lose the last few seconds of transactions. Or you can wait for the primary region to come back, with no data loss at all. Here, RPO competes with RTO: do you want the service back, or all of the data? With a cross-region distributed SQL database, you don’t face this RTO vs. RPO dilemma. Such a database can place the leaders in the primary region, one follower in a nearby region, and the other follower in a distant one. Because every write is acknowledged by a majority of replicas, the two remaining regions can elect new leaders and keep the service available with no data loss. The sole consequence of the network partition is higher latency until the primary region comes back.
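The quorum argument can be checked with a small Python sketch. It models only the majority rule of a Raft-style consensus protocol (no terms, logs, or leader elections), and the region names are placeholders.

```python
from itertools import combinations


def has_quorum(replicas, failed):
    """True if a strict majority of replicas is outside the failed regions."""
    alive = sum(1 for replica in replicas if replica not in failed)
    return alive > len(replicas) // 2


def majorities_overlap(replicas):
    """True if every two majorities share at least one replica.

    This is why a majority quorum loses no committed write: the majority that
    elects a new leader always overlaps the majority that acknowledged each
    committed write, so the committed data survives.
    """
    size = len(replicas) // 2 + 1
    groups = [set(g) for g in combinations(replicas, size)]
    return all(a & b for a in groups for b in groups)


if __name__ == "__main__":
    # Hypothetical placement: one replica per region, as described above.
    replicas = ["primary", "nearby", "distant"]
    for failed in ({"primary"}, {"nearby"}, {"distant"}, {"primary", "nearby"}):
        status = "quorum" if has_quorum(replicas, failed) else "unavailable"
        print(sorted(failed), "->", status)
    print("majorities overlap:", majorities_overlap(replicas))
```

Losing any single region still leaves two of three replicas, enough for a quorum; only the loss of two regions at once makes writes unavailable.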

Why multi-cloud for high availability?

So the most common cause of a region failure is not a geographical catastrophe. In many geographical areas, all the major cloud providers have data centers. They are different “cloud regions” because they belong to different cloud providers, but they are close to each other, which keeps latency low. So, if one is down, the other cloud providers may be up.

In the recent AWS us-east-1 failure, even some multi-region configurations experienced issues. Some had problems with non-regional services, such as accessing S3 files. Others could not access the AWS console because fundamental services like IAM, CloudWatch, or DNS were down. In such a case, other regions of the same cloud provider may be up but not fully operational, so a multi-region configuration may not provide HA. However, if a database runs on nodes across two cloud providers, at least one of them should be up, and latency between them stays low when they are in the same geographical area.

A multi-cloud configuration is the best solution for ensuring high availability through cloud region failures. However, following the AWS us-east-1 outage, some have argued against such an approach, citing cost and complexity. Let’s unpack this reasoning.

The myth of high costs and complexity

Data synchronization has a cost in network traffic. Most cloud providers bill egress traffic between AZs, and all of them charge for transfers between regions and to other cloud providers (or to your own premises). You must carefully consider this and look at the amount of data transferred. Sending all physical changes is the most expensive option (e.g., storage synchronization or log shipping with full database blocks). Logical replication sends only the minimum.
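The cost difference is simple arithmetic: volume shipped per day, times days, times the per-GB price. The Python sketch below assumes a flat per-GB egress price; the price and the daily volumes are hypothetical placeholders, not real provider pricing or measurements, so substitute your own figures.

```python
def monthly_egress_cost(gb_per_day, price_per_gb, compression_ratio=1.0, days=30):
    """Monthly cost of replication traffic, assuming a flat per-GB egress price.

    compression_ratio is the original size divided by the compressed size
    (1.0 means no compression).
    """
    return gb_per_day / compression_ratio * days * price_per_gb


if __name__ == "__main__":
    PRICE = 0.02  # hypothetical $/GB; check your provider's current pricing
    # Hypothetical volumes: shipping full blocks vs. only the logical changes.
    physical = monthly_egress_cost(200, PRICE)
    logical = monthly_egress_cost(20, PRICE)
    logical_compressed = monthly_egress_cost(20, PRICE, compression_ratio=4.0)
    print(f"physical: ${physical:.2f}/month")
    print(f"logical: ${logical:.2f}/month, compressed: ${logical_compressed:.2f}/month")
```

Because the cost is linear in the volume shipped, reducing what is sent (logical instead of physical changes, plus compression) reduces the bill proportionally.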

Another argument is the complexity of moving application connections, load balancers, and containers across cloud providers. Any HA solution should keep complexity to a minimum: if the failover is not seamless, it will fail. If the second cloud provider is used only as a standby, you will run into new connection issues, lack of portability, and compatibility problems, and the application will not keep running during the failover. There’s a good chance the failed region comes back before you can fully restore the services.

Avoiding a region failure with YugabyteDB

YugabyteDB, a popular open source distributed SQL database, replicates key-value changes at the DocDB level. It uses the Raft consensus protocol for synchronous replication and change data capture (CDC) for asynchronous replication. Compression can also be enabled for this network traffic to reduce costs.

In YugabyteDB, adding nodes in another AZ or region of the cloud provider is easy. All you need to do is specify the cloud, region, and zone names in the placement information.
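One way to set such a placement on a running universe is with the yb-admin `modify_placement_info` and `set_preferred_zones` commands, which take zones in `cloud.region.zone` form. The Python sketch below only assembles those command lines for the Frankfurt/London example that follows; it does not run them. The master addresses are hypothetical, and the exact syntax should be checked against the yb-admin reference for your YugabyteDB version.

```python
import shlex

# Hypothetical yb-master endpoints; replace with your own.
MASTERS = ["10.0.0.1:7100", "10.0.0.2:7100", "10.0.0.3:7100"]

# (cloud, region, zone) for each placement block of the AWS example.
ZONES = [
    ("aws", "eu-central-1", "eu-central-1a"),
    ("aws", "eu-central-1", "eu-central-1b"),
    ("aws", "eu-west-2", "eu-west-2a"),
]
PREFERRED = ZONES[:2]  # leader affinity in the two Frankfurt AZs


def placement(cloud, region, zone):
    """Format one zone in yb-admin's cloud.region.zone syntax."""
    return f"{cloud}.{region}.{zone}"


def yb_admin_commands(masters, zones, preferred, replication_factor):
    """Build (but do not run) yb-admin argument lists for this placement."""
    base = ["yb-admin", "--master_addresses", ",".join(masters)]
    return [
        base + ["modify_placement_info",
                ",".join(placement(*z) for z in zones),
                str(replication_factor)],
        base + ["set_preferred_zones", *(placement(*z) for z in preferred)],
    ]


if __name__ == "__main__":
    for cmd in yb_admin_commands(MASTERS, ZONES, PREFERRED, 3):
        print(shlex.join(cmd))  # shlex.join requires Python 3.8+
```

Building the argument lists in code keeps the zone names in one place, so the same list can drive both the placement and the leader preference.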

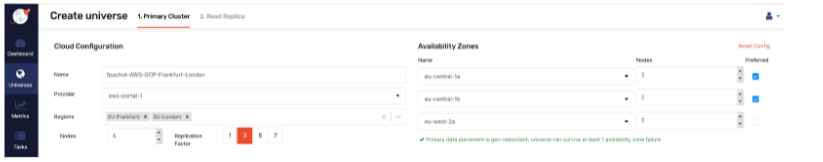

For example, here’s a YugabyteDB primary cluster on Amazon Cloud (with preferred nodes in Frankfurt, Germany across two availability zones):

And here is the placement information for this primary cluster:

replication_info {
  live_replicas {
    num_replicas: 3
    placement_blocks {
      cloud_info {
        placement_cloud: "aws"
        placement_region: "eu-central-1"
        placement_zone: "eu-central-1a"
      }
      min_num_replicas: 1
    }
    placement_blocks {
      cloud_info {
        placement_cloud: "aws"
        placement_region: "eu-central-1"
        placement_zone: "eu-central-1b"
      }
      min_num_replicas: 1
    }
    placement_blocks {
      cloud_info {
        placement_cloud: "aws"
        placement_region: "eu-west-2"
        placement_zone: "eu-west-2a"
      }
      min_num_replicas: 1
    }
  }
  affinitized_leaders {
    placement_cloud: "aws"
    placement_region: "eu-central-1"
    placement_zone: "eu-central-1a"
  }
  affinitized_leaders {
    placement_cloud: "aws"
    placement_region: "eu-central-1"
    placement_zone: "eu-central-1b"
  }
}

I have two placement blocks in Frankfurt (eu-central-1), across two availability zones, with leader affinity. This means that each tablet has its leader and one follower in Frankfurt, so the write quorum (two out of three replicas) is reached within the region, ensuring fast reads and writes. The exception is when one of these AZs is down: writes may then take longer, because the quorum must be acknowledged by the other region. If the whole Frankfurt AWS region is inaccessible, the remaining replica in London (eu-west-2) holds a copy of every tablet, but it cannot form a quorum on its own and may be behind (as the quorum was fully acknowledged in Frankfurt).
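These failure cases can be sketched in Python. The model assumes a replication factor of 3 with the three zones above, counts only network round trips (no disk writes or leader election time), and uses made-up round-trip times; it shows the shape of the trade-off, not real YugabyteDB latencies.

```python
# Hypothetical round-trip times between zones, in ms (illustrative, not measured).
RTT_MS = {
    frozenset({"eu-central-1a", "eu-central-1b"}): 1.0,
    frozenset({"eu-central-1a", "eu-west-2a"}): 15.0,
    frozenset({"eu-central-1b", "eu-west-2a"}): 15.0,
}
REPLICAS = ["eu-central-1a", "eu-central-1b", "eu-west-2a"]
PREFERRED_LEADERS = ["eu-central-1a", "eu-central-1b"]


def write_latency_ms(failed_zones):
    """Approximate write latency for one tablet, or None if it has no quorum.

    The leader counts as one vote and waits for the fastest
    (majority - 1) live followers to acknowledge the write.
    """
    live = [z for z in REPLICAS if z not in failed_zones]
    majority = len(REPLICAS) // 2 + 1
    if len(live) < majority:
        return None  # not enough replicas for a quorum: writes are unavailable
    # The leader moves to a live preferred zone when there is one.
    leader = next((z for z in PREFERRED_LEADERS if z in live), live[0])
    follower_rtts = sorted(RTT_MS[frozenset({leader, z})] for z in live if z != leader)
    return follower_rtts[majority - 2]


if __name__ == "__main__":
    scenarios = {
        "all zones up": set(),
        "eu-central-1b down": {"eu-central-1b"},
        "eu-central-1a down": {"eu-central-1a"},
        "Frankfurt region down": {"eu-central-1a", "eu-central-1b"},
    }
    for name, failed in scenarios.items():
        print(f"{name:>22}: {write_latency_ms(failed)}")
```

With all zones up, the other Frankfurt AZ completes the quorum; losing one Frankfurt AZ makes every write wait for London; losing both leaves a single replica, so the tablet cannot accept writes.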

Configure YugabyteDB’s xCluster

This is where YugabyteDB’s xCluster comes in, configured with bi-directional replication to a second cluster in the opposite configuration: two AZs in London, with preferred leaders, and one AZ in Frankfurt. In theory, you may lose some transactions on failover. But given the latencies, the writes have usually been shipped to the destination before the local quorum (i.e., a round trip between AZs) is acknowledged. This second cluster is ready for reads and writes in London in case the Frankfurt region is inaccessible.

It’s also easy to run this second cluster on another cloud provider, since all the major providers have regions in both London and Frankfurt. Here’s how its placement information looks on Google Cloud:

replication_info {
  live_replicas {
    num_replicas: 3
    placement_blocks {
      cloud_info {
        placement_cloud: "gcp"
        placement_region: "europe-west2"
        placement_zone: "europe-west2-a"
      }
      min_num_replicas: 1
    }
    placement_blocks {
      cloud_info {
        placement_cloud: "gcp"
        placement_region: "europe-west2"
        placement_zone: "europe-west2-b"
      }
      min_num_replicas: 1
    }
    placement_blocks {
      cloud_info {
        placement_cloud: "gcp"
        placement_region: "europe-west3"
        placement_zone: "europe-west3-a"
      }
      min_num_replicas: 1
    }
  }
  affinitized_leaders {
    placement_cloud: "gcp"
    placement_region: "europe-west2"
    placement_zone: "europe-west2-a"
  }
  affinitized_leaders {
    placement_cloud: "gcp"
    placement_region: "europe-west2"
    placement_zone: "europe-west2-b"
  }
}

One thing to note: some cloud providers share the same data center, where each rents a zone. That’s why it’s important to keep nodes in two availability zones on each side. Beyond that, creating virtual machines on different cloud providers involves no additional complexity.

Conclusion

As discussed, a multi-cloud deployment paired with YugabyteDB can ensure high availability through cloud region failures. With YugabyteDB, a multi-AZ configuration is simple to set up. Plus, this open source database’s geo-distributed architecture is designed to withstand the failure of a node, a zone, a region, or even an entire cloud provider.

You can build your own YugabyteDB-as-a-service on any cloud with Yugabyte Platform, the best fit for mission-critical deployments. It provides provisioning, health checks, and continuous monitoring. It also offers a purpose-built tool for on-prem deployments and lifecycle management of containerized applications. You can get started with a 30-day trial today.
