Orchestrating Stateful Apps with Kubernetes StatefulSets

Kubernetes, the open source container orchestration engine that originated from Google’s Borg project, has seen some of the most explosive growth ever recorded in an open source project. The complete software development lifecycle involving stateless apps can now be executed in a more consistent, efficient and resilient manner than ever before. However, the same is not true for stateful apps — containers are inherently stateless and Kubernetes did not do anything special in the initial days to change that.

Over the last year, we observed an increasing desire among our users to see a distributed database such as YugabyteDB orchestrated by Kubernetes. The user benefit remains the same as that for stateless apps: a uniform and consistent way to run the full stack of the application across the complete development, deployment and production lifecycle. As we started digging deeper into StatefulSets, Kubernetes’ purpose-built controller for stateful apps, we were excited by the rapid progress the community was making in stabilizing it. Steady user feedback, starting with the Alpha stage in v1.3 (Jul 2016) and continuing through the Beta stage in v1.5 (Dec 2016), led to the hardening of a critical set of features, culminating in the Stable stage in v1.9 (Dec 2017).

What’s Special About Stateful Apps?

The first question to answer is how stateful apps differ from stateless apps. As we will see, the “state” aspect of stateful apps makes orchestration more complex than what the initial Kubernetes controllers were built for.

Persistent Volumes

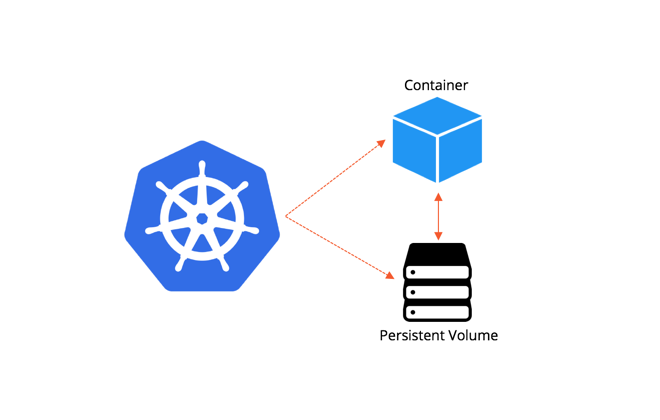

As highlighted in Containerizing Stateful Applications, a data volume mapped to a container can live in one of three places:

1. Inside the container

2. Inside the host, independent of the container

3. Outside the host, independent of both the host and the container

Stateless applications by definition have no need for long-running persistence and hence usually rely on the first 2 options. However, stateful applications (such as databases, message queues, metadata stores) have to use the 3rd approach in order to guarantee that the data outlives the lifecycle of a container or a host. The above also means that as new containers or new hosts are brought up, we should be able to map them to existing persistent volumes.

Stable Network Identities

Stateful apps need stable network IDs so that the special roles their instances play can be preserved. For example, in a deployment of a monolithic RDBMS such as PostgreSQL or MySQL, there is usually 1 master and multiple read-only slaves. Each slave communicates with the master, letting it know the point up to which it has synced the master’s data. For this to work, the slaves must know that “instance-0” is the master and the master must know that “instance-1” is a slave, even after either of them restarts.
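To make the idea of a stable network ID concrete, here is a minimal sketch of the DNS naming convention Kubernetes applies to pods that are managed by a StatefulSet and fronted by a headless service. The function name and the example names (`instance`, `db`) are hypothetical, and `cluster.local` is only the common default cluster domain, so treat the output as illustrative.

```python
def pod_dns_name(statefulset: str, ordinal: int, service: str,
                 namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the stable DNS name of one StatefulSet pod.

    Pod names follow <statefulset>-<ordinal>; the headless service adds
    a per-pod DNS record under <service>.<namespace>.svc.<cluster domain>.
    """
    pod_name = f"{statefulset}-{ordinal}"
    return f"{pod_name}.{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # "instance" and "db" are made-up names for illustration only.
    print(pod_dns_name("instance", 0, "db"))
    # instance-0.db.default.svc.cluster.local
```

Because the name depends only on the StatefulSet name and the ordinal, a pod that is rescheduled to another node comes back under the same name.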

Ordered Operations

The RDBMS example also makes it clear that the various DB instances need to be brought up in an explicit order: the slaves can be brought up only after the master has been brought up successfully. This is the 3rd important difference between stateful and stateless apps. Instances of stateless apps play no special role (i.e. they are exactly the same) and hence the order of their startup, scale-down and upgrade doesn’t matter.
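As a rough illustration of what ordered operations look like, the sketch below models how a StatefulSet with the default ordered pod management treats ordinals: pods are created from the lowest ordinal upward and removed from the highest ordinal downward. The function names are hypothetical, and the model ignores readiness checks, which the real controller waits on between steps.

```python
from typing import List


def startup_order(replicas: int) -> List[int]:
    """Ordinals in creation order: 0, 1, ..., replicas - 1."""
    return list(range(replicas))


def scale_down_order(current: int, target: int) -> List[int]:
    """Ordinals in removal order when shrinking from current to target."""
    return list(range(current - 1, target - 1, -1))


if __name__ == "__main__":
    print(startup_order(3))        # [0, 1, 2]
    print(scale_down_order(3, 1))  # [2, 1]
```

In the RDBMS example, this is what lets the master (ordinal 0) come up before any slave and go away last.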

Bringing it All Together with YugabyteDB

By simply reviewing the commands involved in starting a YugabyteDB cluster (aka universe), we can see each of the above needs in practice. YB-Masters, the authoritative source for cluster metadata, have to be started first and each instance has a stable ID of its own. Note that YB-Masters do not play the same role as RDBMS Master instances since they are not involved in the core IO path of the database. YB-TServers, the data node servers, are started thereafter with the IDs of the masters provided as input. Both these sets of servers are mapped to persistent disks for storing their data.
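The dependency described above, where YB-TServers are started with the IDs of the YB-Masters as input, can be sketched as follows. Everything specific here is an assumption made for illustration rather than taken from this post: the StatefulSet name `yb-master`, the headless service name `yb-masters`, the RPC port `7100`, the data directory, and the use of the `--tserver_master_addrs` and `--fs_data_dirs` flags. Check the YugabyteDB documentation for the values that apply to your version.

```python
from typing import List


def master_addresses(replicas: int,
                     statefulset: str = "yb-master",   # assumed name
                     service: str = "yb-masters",      # assumed name
                     namespace: str = "default",
                     rpc_port: int = 7100) -> str:     # assumed port
    """Comma-separated list of stable master addresses, one per ordinal."""
    hosts = [
        f"{statefulset}-{i}.{service}.{namespace}.svc.cluster.local:{rpc_port}"
        for i in range(replicas)
    ]
    return ",".join(hosts)


def tserver_args(master_addrs: str, data_dir: str = "/mnt/data0") -> List[str]:
    """Argument list for one data node; flag names are assumptions."""
    return [
        "yb-tserver",
        f"--tserver_master_addrs={master_addrs}",
        f"--fs_data_dirs={data_dir}",
    ]


if __name__ == "__main__":
    for arg in tserver_args(master_addresses(3)):
        print(arg)
```

The point of the sketch is that the master list can be computed before any pod exists, because the addresses are derived from names and ordinals rather than from IPs.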

Mapping to Common Kubernetes Controller APIs

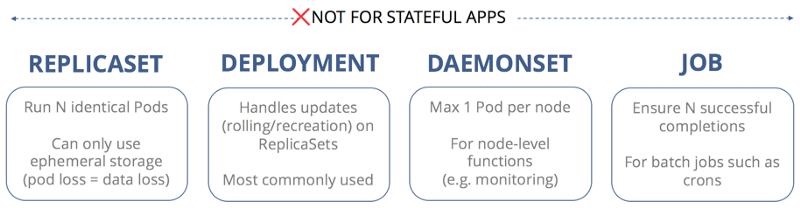

Having established that stateful apps have different needs from those of stateless apps, let’s map these needs to the commonly used Kubernetes controllers.

ReplicaSet: used to run N identical, interchangeable pods. Because every pod is created from the same template, there is no way to give each replica its own persistent volume or a stable identity, so it is clearly not a fit for stateful apps.

Deployment: extends the ReplicaSet functionality with update semantics that allow pod recreation and rolling upgrades. The de facto standard for stateless apps.

DaemonSet: ensures that each eligible node runs exactly 1 copy of a pod, thus enabling node-level functions such as monitoring using a machine agent.

Job: used for running batch work to completion. Running such work on a pre-determined schedule is handled by the related CronJob controller.

As we can see, none of the above controllers are suitable for running stateful apps. Hence, the need for StatefulSets, a special controller purpose-built for the needs of stateful apps.

Introducing Kubernetes StatefulSets

The Kubernetes StatefulSets API is best understood by reviewing its 4 key features.

1. Ordered operations with ordinal index

Applies to all critical pod operations such as startup, scale-up, scale-down, rolling upgrades, termination.

2. Stable, unique network ID/name across restarts

This ensures that re-spawning a pod will not make the cluster treat it as a new member.

3. Stable, persistent storage (linked to ordinal index/name)

Allows attaching the same persistent disk to a pod even if it gets rescheduled to a new node.

4. Mandatory headless service (no single IP) for integrations

Smart clients are aware of all pods and can connect to any of them without using any kind of independent load-balancer service.
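The four features above map onto a small number of manifest fields. The sketch below builds a headless Service and a StatefulSet as plain Python dictionaries and prints them as JSON, which `kubectl apply -f -` should accept in place of YAML. The names (`example-db`, `example-dbs`, `datadir`), the image, the port, the mount path and the storage size are all hypothetical placeholders, not values from any real deployment.

```python
import json


def headless_service(name: str, app: str, port: int) -> dict:
    """Feature 4: a Service with clusterIP "None" has no single virtual IP."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": {"app": app}},
        "spec": {
            "clusterIP": "None",
            "selector": {"app": app},
            "ports": [{"name": "rpc", "port": port}],
        },
    }


def stateful_set(name: str, service_name: str, app: str, image: str,
                 replicas: int, port: int, storage: str) -> dict:
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Feature 2: ties pod DNS names to the headless service.
            "serviceName": service_name,
            "replicas": replicas,
            # Feature 1: ordered startup and termination (the default).
            "podManagementPolicy": "OrderedReady",
            "selector": {"matchLabels": {"app": app}},
            "template": {
                "metadata": {"labels": {"app": app}},
                "spec": {"containers": [{
                    "name": app,
                    "image": image,
                    "ports": [{"containerPort": port, "name": "rpc"}],
                    "volumeMounts": [{"name": "datadir",
                                      "mountPath": "/mnt/data0"}],
                }]},
            },
            # Feature 3: one PersistentVolumeClaim per pod ordinal.
            "volumeClaimTemplates": [{
                "metadata": {"name": "datadir"},
                "spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": storage}},
                },
            }],
        },
    }


def claim_name(template: str, statefulset: str, ordinal: int) -> str:
    """Claims are named <template>-<statefulset>-<ordinal>."""
    return f"{template}-{statefulset}-{ordinal}"


if __name__ == "__main__":
    svc = headless_service("example-dbs", "example-db", 7100)
    sts = stateful_set("example-db", "example-dbs", "example-db",
                       "example/db:1.0", 3, 7100, "1Gi")
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": [svc, sts]}, indent=2))
    print(claim_name("datadir", "example-db", 0))  # datadir-example-db-0
```

Since the claim name is derived from the pod's ordinal, a re-created `example-db-0` binds to the same claim, and therefore the same disk, as its predecessor.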

YugabyteDB Deployed as StatefulSets

YugabyteDB can be deployed on Kubernetes StatefulSets in Minikube, Google Kubernetes Engine and Azure Kubernetes Service environments.

YB-Masters and YB-TServers each have a StatefulSet of their own. App clients connect to YB-TServer using its headless service while admin clients (such as YugabyteDB Admin Console) connect to the YB-Master using its own headless service. The basic StatefulSet-based Kubernetes YAML can be reviewed on GitHub.

Other Examples

PostgreSQL

PostgreSQL’s HA deployment involves running a single master (responsible for handling writes) with 1+ replicas (aka read-only slaves). As shown in the figure, the master and the replicas are deployed together in a single StatefulSet. The headless service representing the master is associated with the pod with ordinal index 0. Pods with non-zero ordinal indexes are connected to a different headless service named “Replica Service”.

Note that while this approach allows easy scaling of the replicas, the loss of the master pod requires manual intervention involving promotion of one of the replicas to a master pod (and also results in loss of data that was not yet synced from the original master to this replica).
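The ordinal-based split between master and replicas described above can be sketched as a small helper that a pod's startup script might use to decide its role from its own name. This is an illustrative assumption about how such a deployment could be wired, not the method used by any particular PostgreSQL chart. The pod names are hypothetical.

```python
def ordinal_of(pod_name: str) -> int:
    """Extract the ordinal from a StatefulSet pod name such as 'postgres-2'."""
    _, _, suffix = pod_name.rpartition("-")
    return int(suffix)


def role_for(pod_name: str) -> str:
    """Ordinal 0 acts as the master; every other ordinal is a replica."""
    return "master" if ordinal_of(pod_name) == 0 else "replica"


if __name__ == "__main__":
    for name in ("postgres-0", "postgres-1", "postgres-2"):
        print(name, role_for(name))
    # postgres-0 master
    # postgres-1 replica
    # postgres-2 replica
```

The same fixed mapping is why failover stays manual in this design: the role is tied to the ordinal, so promoting a replica means changing that mapping by hand.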

Elasticsearch

Elasticsearch data nodes can also be deployed as a StatefulSet, working alongside the client nodes and master nodes, both of which are deployed as Deployments.

Summary

With the GA of StatefulSets in v1.9, Kubernetes has become a viable solution for orchestrating stateful apps. Additional features such as node-local storage, once stable (still in Beta in the current v1.10 release), will make Kubernetes a strong candidate for mission-critical, high-performance production environments. Another critical feature for high data resilience is pod anti-affinity, which can ensure that no two pods of the same StatefulSet are scheduled on the same node. We will review these considerations along with a few more in a follow-up post.
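For readers who want a preview of the pod anti-affinity rule mentioned above, here is a minimal sketch of the stanza that would go under the `affinity` field of a StatefulSet's pod template spec. It assumes pods are labeled `app=<name>`; the label key and the example value `example-db` are hypothetical.

```python
import json


def required_anti_affinity(app_label: str) -> dict:
    """Hard rule: never co-locate two pods carrying the same app label.

    topologyKey "kubernetes.io/hostname" makes the node the unit of
    separation.
    """
    return {
        "podAntiAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": [{
                "labelSelector": {
                    "matchExpressions": [{
                        "key": "app",
                        "operator": "In",
                        "values": [app_label],
                    }],
                },
                "topologyKey": "kubernetes.io/hostname",
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(required_anti_affinity("example-db"), indent=2))
```

A softer variant uses `preferredDuringSchedulingIgnoredDuringExecution`, which lets the scheduler place two pods on one node when no other node is available.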