YugabyteDB Community Engineering Update, Tricks and Tips – Oct 18, 2019

Welcome to this week’s community update, where we recap a few interesting questions that have popped up in the last week or so on the YugabyteDB Slack channel, the Forum, GitHub, or Stack Overflow. We’ll also review upcoming events, new blogs, and documentation published since the last update. OK, let’s dive right in:

Running yb-ctl status does not give node information

Question

AndrewLiuRM over on the forums asked why the yb-ctl status command doesn’t give node information when issued after a manual deployment onto a Docker container.

Answer

The yb-ctl status is only meant to be used for local clusters that are created using the yb-ctl command. For manual deployments use:

https://ip of container:7000/

or

https://ip of container:7000/tablet-servers

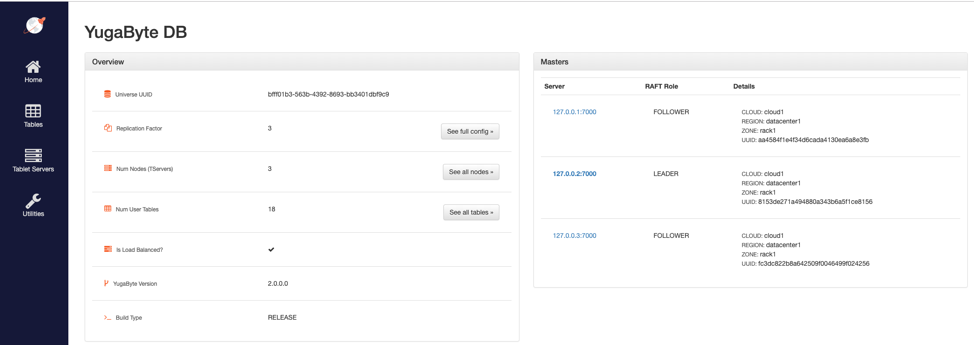

…will allow you to view the nodes in the cluster. You should see a UI similar to the one below.
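As a small illustration of the two addresses above, the sketch below builds them for a given container IP. The function name and the sample IP are hypothetical. The scheme defaults to the one shown in this post, and you may need to pass plain http if TLS is not enabled on your deployment.

```python
def master_ui_urls(host, port=7000, scheme="https"):
    """Return the master UI home page and the tablet-servers page.

    host, port, and scheme are illustrative parameters; adjust them to
    match how your own yb-master is actually exposed.
    """
    base = f"{scheme}://{host}:{port}"
    return [f"{base}/", f"{base}/tablet-servers"]


# Hypothetical container IP, used only for demonstration.
urls = master_ui_urls("172.17.0.2")
for url in urls:
    print(url)
```

Opening either printed address in a browser should show the pages described above.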

Adding Primary Key to Existing Table Fails

Question

Jagannath over on Slack asked why adding a primary key constraint to an existing table failed.

Answer

Currently, you need to define the primary key constraint as part of the CREATE TABLE statement. Support for adding a primary key after the fact is actively being worked on; you can track the GitHub issue here.

How to Generate UUIDs

Question

RobinB over on Slack asked for a way to generate a UUID in the database.

Answer

Currently, you can enable the pgcrypto extension:

CREATE EXTENSION IF NOT EXISTS pgcrypto;

and then call its gen_random_uuid() function, for example:

SELECT gen_random_uuid();
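If generating the identifier in the application is acceptable for your use case, a client-side alternative is sketched below. This is not the pgcrypto approach from the answer: it uses Python's standard uuid module to produce a random (version 4) UUID, which is the same UUID version that gen_random_uuid() returns. The function name new_row_id is hypothetical.

```python
import uuid


def new_row_id() -> str:
    """Return a random (version 4) UUID as a string, generated client-side."""
    return str(uuid.uuid4())


row_id = new_row_id()
print(row_id)
```

The resulting string can be passed as a query parameter for a uuid column. Generating the value in the database keeps the logic in one place, so treat this only as an option when the application needs to know the ID before the insert.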

Step-by-Step: How to Recover a Cluster After a Failure

Question

Noorain Panjwani over on Slack asked for a step-by-step guide on how to recover a cluster when a master and/or tserver fails.

Answer

Assume an N-node setup with a replication factor (RF) of 3.

If a node goes down, the system heals automatically and continues to function with the remaining N-1 nodes. If the node doesn’t come back quickly enough and N-1 >= 3, the tablets that are now under-replicated are automatically re-replicated on the remaining N-1 nodes to get back to RF=3.
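The arithmetic behind this can be sketched in a few lines. The helper names are hypothetical. The first function relies on the general majority rule for Raft-style replication (a group of RF replicas stays available while a majority is alive), and the second simply encodes the N-1 >= 3 condition from the paragraph above.

```python
def fault_tolerance(rf: int) -> int:
    """Replica failures a group of rf replicas can survive while keeping a majority."""
    return (rf - 1) // 2


def can_re_replicate(total_nodes: int, failed_nodes: int, rf: int = 3) -> bool:
    """True when enough live nodes remain to host rf replicas of each tablet."""
    return total_nodes - failed_nodes >= rf


print(fault_tolerance(3))      # 1
print(can_re_replicate(4, 1))  # True: 3 nodes remain, so RF=3 can be restored
print(can_re_replicate(3, 1))  # False: only 2 nodes remain
```

In the last case the cluster keeps serving requests with two of three replicas, but it stays under-replicated until a third node is available.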

It’s a good idea to have a cron or systemd setup that ensures the yb-master/yb-tserver process is restarted if it is not running. This handles transient failures, such as a node reboot or a process crash caused by a bug or other unexpected behavior.

If the node failure is permanent, then for the yb-tserver it is sufficient to start another yb-tserver process on a new node. It joins the cluster, and the load balancer automatically takes the new yb-tserver into consideration and starts rebalancing tablets to it.

Master Quorum Change: If a new yb-master needs to be started to replace a failed master, the master quorum needs to be updated. Suppose the original yb-masters were n1, n2, n3, and n3 needs to be replaced with a new yb-master on n4. Then you’ll use:

% bin/yb-admin -master_addresses n1:7100,n2:7100 change_master_config REMOVE_SERVER n3 7100

% bin/yb-admin -master_addresses n1:7100,n2:7100 change_master_config ADD_SERVER n4 7100
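For scripting the same two steps, a minimal sketch is shown below. It only assembles the command lines exactly as they appear above and does not run them. The function name and its parameters are hypothetical.

```python
def master_replacement_commands(live_masters, failed_master, new_master,
                                port=7100, yb_admin="bin/yb-admin"):
    """Build the REMOVE_SERVER and ADD_SERVER commands as argument lists.

    live_masters are the masters that are still healthy (n1 and n2 in the
    example above); they are the ones passed to -master_addresses.
    """
    addresses = ",".join(f"{host}:{port}" for host in live_masters)
    base = [yb_admin, "-master_addresses", addresses, "change_master_config"]
    return [
        base + ["REMOVE_SERVER", failed_master, str(port)],
        base + ["ADD_SERVER", new_master, str(port)],
    ]


commands = master_replacement_commands(["n1", "n2"], "n3", "n4")
for cmd in commands:
    print(" ".join(cmd))
```

Each argument list could be handed to subprocess.run once you have confirmed that the printed commands match what you intend to execute.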

After the ADD_SERVER step, the tservers automatically learn of the new master in their in-memory state and do not need a restart. However, you should also update the master addresses in the yb-tserver config file to reflect the new quorum (n1, n2, n4), so that the yb-tserver can find the masters if it restarts at some point in the future.
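The config update amounts to swapping one host in a comma-separated address list. The sketch below shows that substitution on the value itself. The helper name is hypothetical, and where the value lives (typically the tserver_master_addrs setting) depends on how your yb-tserver is configured, so check your own config file.

```python
def replace_master(master_addrs: str, old_host: str, new_host: str) -> str:
    """Swap old_host for new_host in a comma-separated host:port list."""
    updated = []
    for addr in master_addrs.split(","):
        host, _, port = addr.strip().partition(":")
        if host == old_host:
            host = new_host
        # Keep the port if one was given; otherwise keep the bare host.
        updated.append(f"{host}:{port}" if port else host)
    return ",".join(updated)


new_addrs = replace_master("n1:7100,n2:7100,n3:7100", "n3", "n4")
print(new_addrs)  # n1:7100,n2:7100,n4:7100
```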

We also have a guide on how to perform a planned cluster change, such as moving the entire cluster to a brand new set of nodes or machine types.

New Docs, Blogs, Tutorials, and Videos

New Blogs

- 9 Techniques to Build Cloud-Native, Geo-Distributed SQL Apps with Low Latency

- How to: PostgreSQL Fuzzy String Matching in YugabyteDB

- YugabyteDB Engineering Update – Oct 14, 2019

- 2019 Distributed SQL Summit Recap and Highlights

- The Effect of Isolation Levels on Distributed SQL Performance Benchmarking

- Getting Started with PostgreSQL Triggers in a Distributed SQL Database

- Recapping My Internship at Yugabyte – Jayden Navarro

New Videos

We have uploaded over a dozen videos from this year’s Distributed SQL Summit. You can find links to the presentations, slides, and a recap of the highlights in “2019 Distributed SQL Summit Recap and Highlights.”

Upcoming Meetups and Conferences

PostgreSQL Meetups

- Oct 24: Vancouver PostgreSQL Meetup

Distributed SQL Webinars

- Oct 30: Distributed Databases Deconstructed: CockroachDB, TiDB and YugabyteDB

- Nov 6: Developing Cloud-Native Spring Microservices with a Distributed SQL Backend

KubeCon + CloudNativeCon

- Nov 18-21: San Diego

AWS re:Invent

- Dec 2-6: Las Vegas

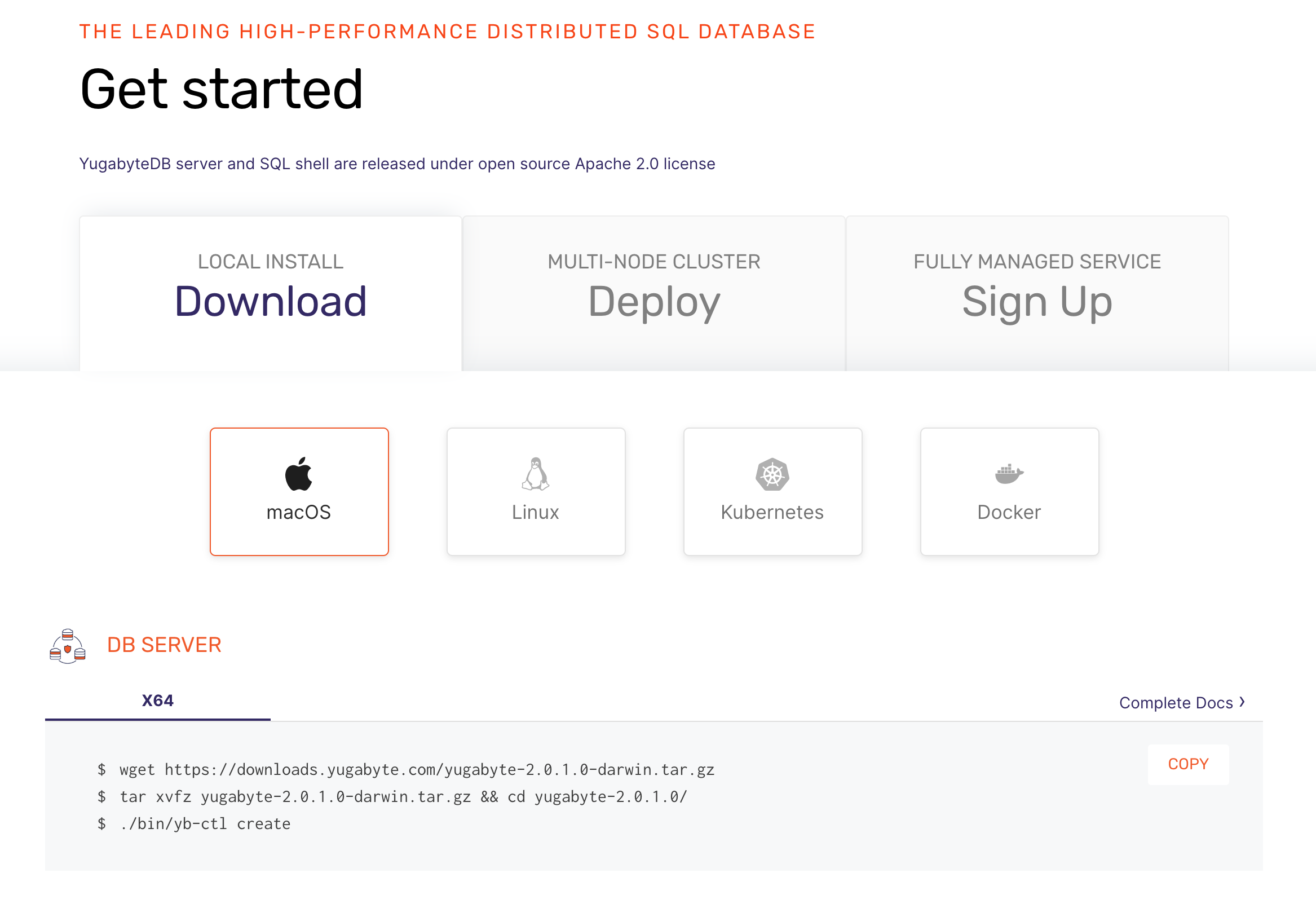

Get Started

Ready to start exploring YugabyteDB features? Getting up and running locally on your laptop is fast. Visit our quickstart page to get started.

What’s Next?

- Compare YugabyteDB in depth to databases like CockroachDB, Google Cloud Spanner and MongoDB.

- Get started with YugabyteDB on macOS, Linux, Docker, and Kubernetes.

- Contact us to learn more about licensing, pricing or to schedule a technical overview.